Ripley Leads the Use of AI in Campaign Optimization — and Proves It with an A/B Experiment

For CMOs and Heads of Marketing, one of the most pressing challenges is maximizing advertising budget performance in an environment with an ever-growing number of campaigns and channels. AI-based tools offer a promising alternative for optimizing paid media investment, but marketing leaders naturally demand concrete evidence of their impact. In this article, we share the key learnings from a recent A/B test conducted on Ripley’s online campaigns.

Experiment Methodology

Hypothesis

The core hypothesis was that AI-driven optimization would generate higher growth in revenue and/or ROAS compared to manual optimization. In other words, we expected the optimized group to outperform the control group in incremental sales or efficiency (or both).

Duration

The test was conducted over a four-week period.

Metrics

For both groups, the following metrics were measured:

- Net revenue (total sales generated, net of returns)

- Spend (advertising investment)

- ROAS (Return on Ad Spend, calculated as Revenue / Spend)

The lift in these metrics was measured relative to a baseline period.
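Below is a minimal sketch of how the three metrics, and their lift against the baseline period, might be computed for one group. The `Period` structure, the function names and the figures in the example are all hypothetical. The article does not publish the underlying data.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Aggregated results for one group over one period (hypothetical structure)."""
    net_revenue: float  # total sales generated, net of returns
    spend: float        # advertising investment

    @property
    def roas(self) -> float:
        # ROAS = Revenue / Spend
        return self.net_revenue / self.spend


def lift(current: float, baseline: float) -> float:
    """Relative change versus the baseline period, e.g. 0.19 means +19%."""
    return current / baseline - 1


def metric_lifts(test: Period, baseline: Period) -> dict[str, float]:
    """Lift of each tracked metric in the test period relative to the baseline."""
    return {
        "net_revenue": lift(test.net_revenue, baseline.net_revenue),
        "spend": lift(test.spend, baseline.spend),
        "roas": lift(test.roas, baseline.roas),
    }


# Hypothetical figures, for illustration only
baseline = Period(net_revenue=100_000, spend=25_000)
test = Period(net_revenue=120_000, spend=30_000)
print(metric_lifts(test, baseline))
```

In this made-up example, revenue and spend both grow by 20%, so ROAS stays flat. ROAS only moves when revenue and spend grow at different rates.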

Test Design

To assess the impact of AI-driven budget optimization, a controlled A/B test was implemented. Ripley’s campaigns were split into two comparable groups:

- Optimized Group, managed by Mixilo, our Paid Media Optimizer

- Control Group, managed under the business-as-usual manual budgeting approach

In the Optimized Group, Mixilo dynamically reallocated budget across campaigns, modifying only spend levels. Periodic checkpoints were conducted to validate business constraints, but beyond that, the AI had autonomy to redistribute budgets according to its algorithms.

In the Control Group, Ripley’s marketing team continued to manage budgets manually. Analysts made periodic adjustments following their usual process and recorded their own projections for spend, revenue, and ROAS for each change.

Key Considerations

- Care was taken to ensure both groups were as similar as possible: a comparable number of campaigns, similar total investment levels, and the same market conditions (with no seasonal events or promotions that could bias the results).

- Throughout the experiment, targeting and creatives were kept constant across both groups.

- To isolate the effect of the test, the groups did not compete for the same users: each focused on different product categories.

- The control team did not have visibility into the actions of the AI-optimized group, preventing any form of imitation or bias.

Results: Uplift from AI-Driven Optimization

Spoiler alert: campaigns optimized with AI outperformed manually managed campaigns.

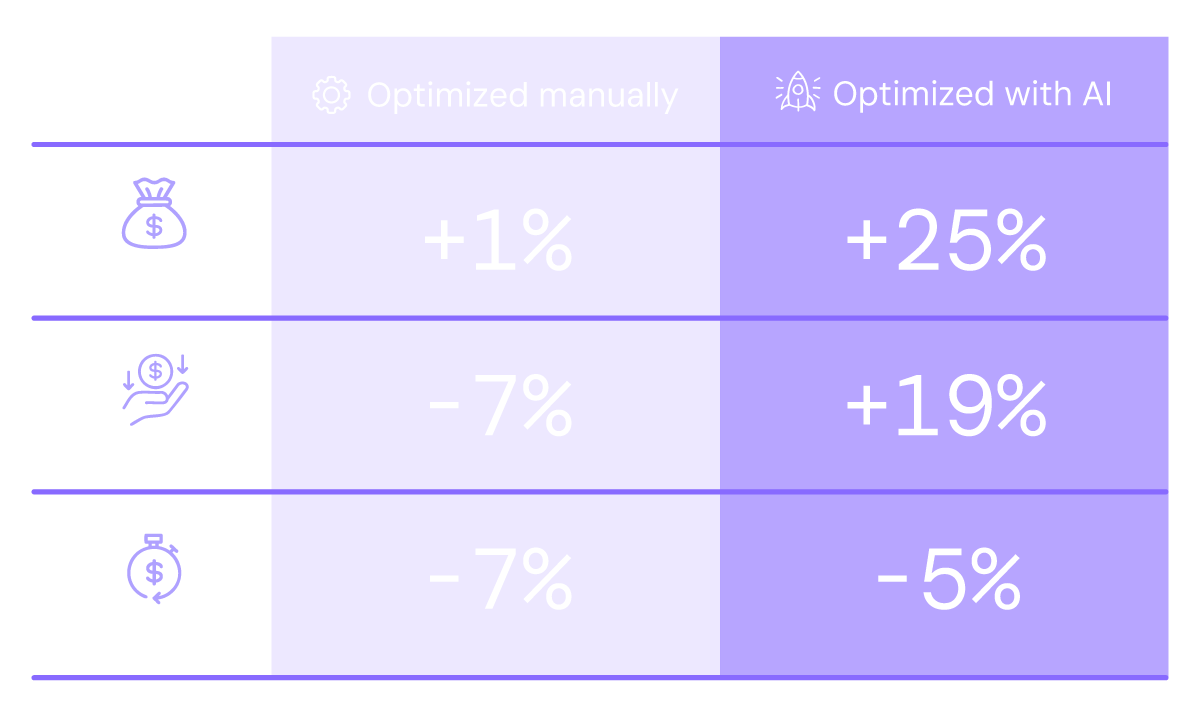

Revenue

The AI-Optimized Group achieved a 19% increase in net revenue compared to its previous period, while the Control Group recorded a 7% decrease.

Advertising Spend

The AI-Optimized Group increased advertising spend by approximately 25% compared to the previous period, while spend in the Control Group increased by only 1%.

This occurred primarily because the AI identified opportunities to invest more in high-performing campaigns. Mixilo effectively “found” additional budget to allocate where it was generating profitable returns, while the control group kept budgets more stable.

Return on Ad Spend (ROAS)

Return on ad spend remained solid in the AI-optimized group despite the aggressive increase in investment.


The AI-Optimized Group concluded the experiment with a ROAS approximately 5% lower than the baseline. However, this slight decline represented a reasonable trade-off given the strong increase in total revenue.
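As a quick consistency check, take only the rounded headline figures reported here: +19% net revenue and roughly +25% spend. The ROAS change then follows directly from the definition ROAS = Revenue / Spend:

```latex
\frac{\text{ROAS}_{\text{test}}}{\text{ROAS}_{\text{base}}}
  = \frac{\text{Revenue}_{\text{test}} / \text{Revenue}_{\text{base}}}
         {\text{Spend}_{\text{test}} / \text{Spend}_{\text{base}}}
  \approx \frac{1.19}{1.25} = 0.952
```

That is a decline of roughly 4.8%, consistent with the reported figure of about 5%.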

The Control Group, by contrast, saw its ROAS decline by approximately 7% despite barely increasing investment.

In other words, the control group lost efficiency without capturing growth, while the AI-driven strategy delivered growth at a marginal cost to efficiency. By the end of the test, the optimized campaigns generated substantially more sales with only a slightly lower ROAS. This outcome supports the hypothesis that an AI-driven budgeting strategy can outperform traditional methods on revenue growth while losing less efficiency than the manual approach (a ROAS decline of about 5% versus about 7%).

Interpretation of the Results

These results illustrate how an AI-based optimizer can uncover value that often goes unnoticed under traditional budgeting approaches. The AI did not simply spend more to sell more: it directed each additional dollar to the campaigns where it would return the most.

Efficient budget allocation depends on marginal ROAS — that is, the return generated by the next dollar invested. The optimizer redistributed spend algorithmically until marginal ROAS was balanced across campaigns.
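To make the idea concrete, here is a minimal sketch of one standard way to balance marginal ROAS. It is a greedy allocator that hands out budget in small increments, each time to the campaign whose next dollar returns the most. It is not Mixilo's algorithm, which the article does not describe. The saturating response curve `revenue = scale * spend / (spend + half_sat)` and all campaign parameters are hypothetical, chosen only to produce diminishing returns.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    name: str
    scale: float     # hypothetical revenue ceiling of the response curve
    half_sat: float  # hypothetical spend at which half the ceiling is reached

    def revenue(self, spend: float) -> float:
        # Concave response curve: each extra dollar returns less than the last
        return self.scale * spend / (spend + self.half_sat)

    def marginal_roas(self, spend: float) -> float:
        # d(revenue)/d(spend): the return on the next dollar at this spend level
        return self.scale * self.half_sat / (spend + self.half_sat) ** 2


def allocate(campaigns: list[Campaign], total_budget: float,
             step: float = 100.0) -> dict[str, float]:
    """Greedily assign budget in `step` increments to the highest marginal ROAS."""
    spend = {c.name: 0.0 for c in campaigns}
    remaining = total_budget
    while remaining >= step:
        best = max(campaigns, key=lambda c: c.marginal_roas(spend[c.name]))
        spend[best.name] += step
        remaining -= step
    return spend


def total_revenue(campaigns: list[Campaign], spend: dict[str, float]) -> float:
    return sum(c.revenue(spend[c.name]) for c in campaigns)


campaigns = [Campaign("A", scale=50_000, half_sat=5_000),
             Campaign("B", scale=20_000, half_sat=2_000)]
greedy = allocate(campaigns, total_budget=10_000)
even = {"A": 5_000.0, "B": 5_000.0}
for c in campaigns:
    print(c.name, greedy[c.name], round(c.marginal_roas(greedy[c.name]), 2))
print(round(total_revenue(campaigns, greedy)), round(total_revenue(campaigns, even)))
```

With these made-up curves, an even split leaves A's marginal ROAS at 2.5 and B's at about 0.82. The greedy split brings both to roughly 1.7 and earns more total revenue from the same budget. Because the curves are concave, the greedy increments approximate the point where marginal returns are equal.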

The analysis showed that, in the AI-Optimized Group, the marginal returns of most campaigns converged toward an optimal range, meaning that each additional dollar was generating the maximum possible value. By contrast, in the Control Group, campaigns exhibited misaligned marginal returns: some were overinvested (with diminishing returns), while others were underinvested (with missed opportunities).

Put differently, the team continued to invest in campaigns that had already reached their saturation point, while the AI avoided this issue by continuously reallocating budget toward higher-return opportunities.
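A simple diagnostic for this pattern, sketched under assumptions, compares each campaign's estimated marginal ROAS with the portfolio median and flags the outliers. How the marginal estimates are obtained (for example, from fitted response curves) is outside its scope. The function name, the ±15% band and the input values are all hypothetical.

```python
from statistics import median


def classify_investment(marginal_roas: dict[str, float],
                        tolerance: float = 0.15) -> dict[str, str]:
    """Label campaigns whose marginal ROAS sits far from the portfolio median.

    Well above the median: the next dollar earns more than elsewhere (likely
    underinvested). Well below: diminishing returns have set in (likely
    overinvested). `tolerance` is a hypothetical relative band around the median.
    """
    mid = median(marginal_roas.values())
    labels = {}
    for name, value in marginal_roas.items():
        if value > mid * (1 + tolerance):
            labels[name] = "underinvested"
        elif value < mid * (1 - tolerance):
            labels[name] = "overinvested"
        else:
            labels[name] = "balanced"
    return labels


# Hypothetical marginal ROAS estimates (revenue from the next dollar)
estimates = {"A": 2.4, "B": 1.0, "C": 1.1, "D": 0.5}
print(classify_investment(estimates))
# → {'A': 'underinvested', 'B': 'balanced', 'C': 'balanced', 'D': 'overinvested'}
```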

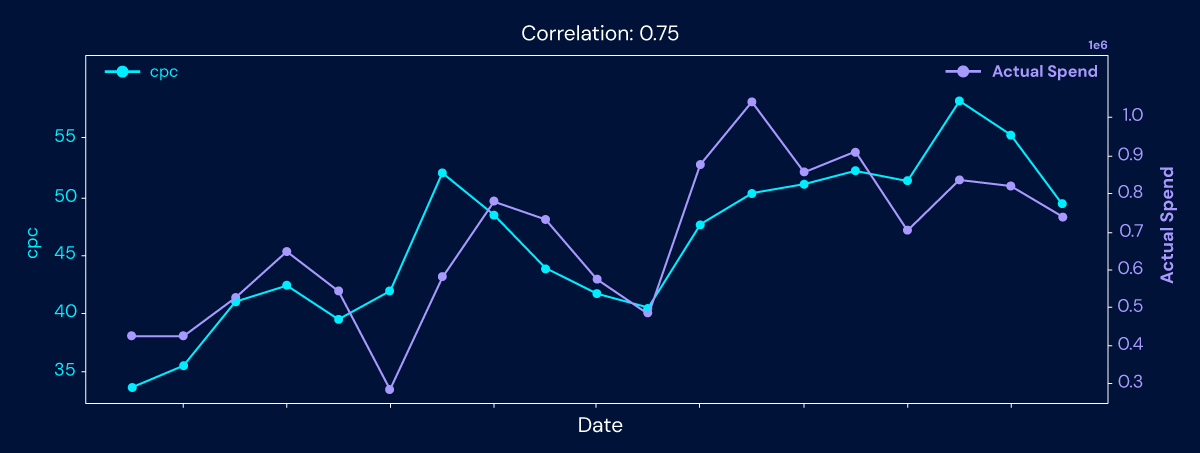

Another key insight was how the AI responded to market signals. Cost per click (CPC), click-through rate (CTR), and conversion rate (CVR) naturally fluctuate from day to day, and during the experiment the AI's budget adjustments were strongly correlated with changes in all three. (When CPC rises, for example, the same budget buys fewer clicks, so maintaining volume requires higher investment.)

Figure 1: CPC vs. actual spend for the AI-optimized group.

Figure 2: CPC vs. actual spend for the control group.

Adaptability is one of Mixilo’s core strengths: it can detect and capitalize on short-term windows of opportunity (or avoid waste in unfavorable conditions) far more quickly than a typical weekly or monthly manual planning cycle.
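The link between these signals and spend can be made explicit with an identity that follows from the ROAS definition used in this article. If revenue is clicks × CVR × average order value (AOV) and spend is clicks × CPC, then ROAS = CVR × AOV / CPC. The sketch below is a simplified illustration with hypothetical numbers, not how Mixilo models these signals. AOV is an extra input the article does not discuss. CTR does not appear in this identity; in this simplified view it affects volume rather than per-click economics.

```python
from dataclasses import dataclass


@dataclass
class DaySignals:
    cpc: float  # cost per click
    cvr: float  # conversions per click
    aov: float  # average order value (hypothetical extra input)


def clicks_bought(budget: float, day: DaySignals) -> float:
    # Under CPC pricing, spend = clicks * CPC
    return budget / day.cpc


def roas(day: DaySignals) -> float:
    # Revenue / Spend = (clicks * CVR * AOV) / (clicks * CPC); clicks cancel out
    return day.cvr * day.aov / day.cpc


def budget_for_clicks(target_clicks: float, day: DaySignals) -> float:
    # Spend needed to keep click volume constant at the day's CPC
    return target_clicks * day.cpc


calm = DaySignals(cpc=0.50, cvr=0.02, aov=100.0)
pricey = DaySignals(cpc=0.60, cvr=0.02, aov=100.0)
target = clicks_bought(1_000.0, calm)                # clicks bought at the lower CPC
print(budget_for_clicks(target, pricey))             # spend needed at the higher CPC
print(round(roas(calm), 2), round(roas(pricey), 2))  # ROAS at each CPC
```

With a 20% CPC increase, holding click volume steady needs 20% more budget. ROAS, meanwhile, drops from 4.0 to about 3.33 unless CVR or order value rises to compensate. An optimizer that re-reads these signals daily can decide whether the extra spend is still worthwhile, instead of waiting for the next planning cycle.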

Trade-offs: Efficiency vs. Volume

It is worth highlighting the strategic decision the AI made in this experiment: it prioritized higher volume at the cost of a slight decline in efficiency. The 5% decrease in ROAS for the AI-Optimized Group indicates that diminishing returns began to emerge as investment increased—something that is entirely normal in advertising (each additional dollar typically generates a slightly lower return than the previous one).
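The shape of this trade-off can be illustrated with a hypothetical saturating response curve. Its form and parameters are assumptions, not fitted to Ripley's data. Raising spend by 25% still grows revenue, but the extra dollars return less than the average dollar, so overall ROAS dips.

```python
def revenue(spend: float, scale: float = 50_000.0, half_sat: float = 10_000.0) -> float:
    """Hypothetical saturating response curve: more spend, smaller extra return."""
    return scale * spend / (spend + half_sat)


def compare(base_spend: float, increase: float) -> dict[str, float]:
    """Effect of raising spend by `increase` (0.25 means +25%) on the curve above."""
    new_spend = base_spend * (1 + increase)
    base_rev, new_rev = revenue(base_spend), revenue(new_spend)
    return {
        "revenue_lift": new_rev / base_rev - 1,
        "roas_change": (new_rev / new_spend) / (base_rev / base_spend) - 1,
        # Return on the extra dollars alone, lower than the average ROAS
        "incremental_roas": (new_rev - base_rev) / (new_spend - base_spend),
    }


print({k: round(v, 3) for k, v in compare(5_000.0, 0.25).items()})
```

Here the additional spend returns about 2.05 per dollar. That is well below the starting average ROAS of about 3.33, yet it still brings in more revenue than it costs in media. This is why trading some efficiency for volume can remain worthwhile.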

Implementation

Ripley’s team did not simply bet on innovation—they did so with the rigor required to validate results, manage internal change, and scale learnings. This combination positions them as leaders in the responsible and effective adoption of AI in paid media.

Based on their experience, we highlight three key principles for a successful implementation:

Validate through experimentation

Treat changes in your marketing strategy as hypotheses that must be validated. Start with a pilot experiment on a subset of campaigns and evaluate lift against a control group before committing large budgets to any AI or MarTech solution. This controlled approach, like the one applied by Ripley, allows teams to move forward with evidence and build organizational trust.
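One common way to read a pilot against its control is a difference in relative lifts, which is a simple form of difference-in-differences. You subtract the control group's change from the treatment group's, so shifts that hit both groups equally cancel out. This assumes both groups would have moved in parallel without the intervention. The function names are hypothetical, and the inputs simply index each group's baseline net revenue to 100 using the lifts reported in the results.

```python
def relative_lift(test: float, baseline: float) -> float:
    """Relative change versus baseline, e.g. 0.19 for +19%."""
    return test / baseline - 1


def incremental_lift(treat_test: float, treat_base: float,
                     ctrl_test: float, ctrl_base: float) -> float:
    """Treatment lift minus control lift (difference-in-differences on relative changes).

    Assumes both groups would have moved in parallel without the intervention,
    so subtracting the control's change removes shifts common to both groups.
    """
    return relative_lift(treat_test, treat_base) - relative_lift(ctrl_test, ctrl_base)


# Net revenue indexed to 100 in each group's baseline
effect = incremental_lift(treat_test=119.0, treat_base=100.0,
                          ctrl_test=93.0, ctrl_base=100.0)
print(f"{effect:+.0%}")
```

A single point estimate says nothing about noise. With daily data, a confidence interval (for example, from bootstrapping days) would show whether the gap is larger than normal fluctuation.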

Manage change and build trust

Introducing a tool like this is not just a technical implementation; it also requires preparing the team for a shift in how work gets done. Campaign managers move from manually adjusting budgets to supervising AI-driven decisions. Some resistance from analysts is natural—especially when the tool proposes bold moves, such as 25% budget increases. To build trust, it is critical to show how the tool frees up valuable time to focus on strategy, creativity, and innovation, while the AI handles the operational burden of continuous optimization. In addition, tracking the accuracy of the AI’s predictions helps teams confirm that the model reliably anticipates outcomes and builds confidence in its recommendations.
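Tracking that accuracy can be as simple as comparing each projection with the realized outcome. The sketch below uses mean absolute percentage error (MAPE) plus a signed bias term. The choice of metrics and the numbers are assumptions. The same check could also be applied to the projections the analysts recorded for the control group.

```python
def mape(predicted: list[float], actual: list[float]) -> float:
    """Mean absolute percentage error; 0.05 means forecasts miss by 5% on average."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need equally sized, non-empty series")
    return sum(abs(p - a) / abs(a) for p, a in zip(predicted, actual)) / len(actual)


def bias(predicted: list[float], actual: list[float]) -> float:
    """Mean signed percentage error: positive means forecasts tend to run high."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need equally sized, non-empty series")
    return sum((p - a) / a for p, a in zip(predicted, actual)) / len(actual)


# Hypothetical projected vs. realized revenue for four budget changes
projected = [10_500.0, 8_000.0, 12_200.0, 9_900.0]
realized = [10_000.0, 8_400.0, 12_000.0, 9_900.0]
print(round(mape(projected, realized), 4), round(bias(projected, realized), 4))
```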

Reevaluate budget planning processes

The success of the AI-driven approach suggests that companies should rethink static budgeting models. Instead of monthly or quarterly “set-and-forget” budgets, organizations should adopt a more dynamic framework in which budget is treated as a flexible resource that adjusts based on performance signals. This does not mean losing control: marketers still define objectives, constraints, and total spend caps, but within that framework, AI can optimize spend distribution far more efficiently. As in Ripley’s case, the result is a higher overall return on marketing investment.
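In practice, "within that framework" can be enforced by checking every proposed reallocation against marketer-defined guardrails before it goes live, much like the periodic constraint checkpoints used during the test. This is a hypothetical sketch. The `Guardrails` structure and its fields are illustrative, not Mixilo's configuration.

```python
from dataclasses import dataclass


@dataclass
class Guardrails:
    total_cap: float             # maximum total spend for the period
    min_spend: dict[str, float]  # per-campaign floors (hypothetical)
    max_spend: dict[str, float]  # per-campaign ceilings (hypothetical)


def violations(plan: dict[str, float], rules: Guardrails) -> list[str]:
    """Return human-readable guardrail breaches for a proposed budget plan."""
    problems = []
    total = sum(plan.values())
    if total > rules.total_cap:
        problems.append(f"total {total:.0f} exceeds cap {rules.total_cap:.0f}")
    for name, amount in plan.items():
        lo = rules.min_spend.get(name, 0.0)
        hi = rules.max_spend.get(name, float("inf"))
        if not lo <= amount <= hi:
            problems.append(f"{name}: {amount:.0f} outside [{lo:.0f}, {hi:.0f}]")
    return problems


rules = Guardrails(total_cap=10_000, min_spend={"A": 1_000, "B": 1_000},
                   max_spend={"A": 8_000})
print(violations({"A": 8_500, "B": 2_000}, rules))  # breaches the cap and A's ceiling
print(violations({"A": 7_100, "B": 2_900}, rules))  # within all guardrails
```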

Conclusion

This A/B test validated the hypothesis that AI-driven campaign optimization can generate substantial improvements in marketing outcomes. The AI-based approach delivered stronger revenue growth (+19% lift in net revenue) while maintaining a solid ROAS, clearly outperforming the traditional approach.

Beyond performance gains, the experiment also revealed the underlying drivers, showing how AI can equalize marginal returns and react to real-time signals. The Ripley team played a critical role in making this possible. Their willingness to measure rigorously, embrace innovation, and lead the process with a learning mindset positions them as a regional benchmark in digital transformation applied to marketing. Their combination of vision and operational excellence made it possible to validate the real impact of AI and lay strong foundations for scaling its use.

For marketing leaders, the implications are both clear and promising: adopting AI in budget optimization enables teams to unlock growth opportunities that manual methods often miss—while doing so in a measurable and controlled way.

The analysis also showed that the ROAS decline was smaller than what historical behavior would have predicted for an investment increase of this magnitude. In comparable past periods, when Ripley’s team increased budgets by 30% or more without AI, ROAS dropped by as much as 41%. With the AI-driven approach, the efficiency loss was limited to just 5%, even with a 25% increase in investment.

Strategic Advantages for Marketing Leaders

For CMOs and Heads of Marketing, these findings point to clear benefits from implementing a tool like this:

Invest confidently where it truly matters

Mixilo demonstrated a strong ability to reallocate budget in real time toward the most profitable opportunities.

Gain agility

Responding quickly to market conditions—something algorithms excel at—drives better results. If CPC drops or conversion rates increase, an AI can immediately scale investment to capitalize on the moment. Manually, this is far more difficult: most teams adjust budgets weekly or monthly, potentially missing these windows of opportunity.

Grow without sacrificing efficiency

One of marketing’s biggest fears is that pursuing growth (higher spend) will destroy efficiency (ROAS). However, with intelligent optimization, it is possible to achieve significant growth with only a minimal loss in efficiency—and in some cases, even improve it.

Empower Your Marketing Team with Muttdata

At Muttdata, we drive this combination of technical rigor and marketing strategy. This experiment is a clear example of data-driven decision-making in action: an AI tool is used, but its value is validated through robust experimentation and analysis.

The learnings from this case reinforce the true role of AI in marketing: it's not a black box to fear, but a sophisticated ally that empowers teams. In an increasingly dynamic advertising ecosystem, Mixilo, our Paid Media Optimizer, delivers a competitive advantage by putting every advertising dollar where it generates the highest return.

Now is the time to dive deeper into the effectiveness of data-driven marketing, and at Muttdata we’re excited to lead that journey alongside our clients.

Ready to get started?

Contact us to access a free demo of our Paid Media Optimizer.
