It's LLMs All The Way Down - Part 1

👉 Smaller models are getting smarter

👉 More Standardization Everywhere

👉 Businesses Show Interest in Incorporating LLMs

👉 Open Source is Catching up to Speed

Disclaimer: Some of the statements made in this post are forecasts based on currently available information. They could be rendered obsolete in the future, or there could be a major change in how these technologies work and how we experience them.

Introduction

Recent advances in LLMs (Large Language Models), image generation, and multimodality open the gates for all sorts of applications. With new developments popping up on a daily basis, it's not enough to know where we are; we must know where we're headed. Yes, we need to predict the future. Why attempt such a thing? Besides doing it because it's cool, there's also a practical, tangible reason: first-mover advantage is real and powerful. Being able to take part in a niche or sector as it is being formed, while competition is low, is very desirable and gives you a head start on getting better. It's enough to take a look around. Here is one example:

- HuggingFace began working with natural language processing around 2016 before pivoting to providing a variety of AI and Machine Learning solutions way before LLMs hit it big. Back then, language models were smaller and more manageable but dumber and had limited capabilities. Nowadays, HuggingFace has become a top player in the AI space and has a $4.5 billion valuation.

Of course, it’s not just about arriving early. You need the technical expertise and resources to pull it off, and obviously, a bit of luck doesn’t hurt.

Predicting the future is challenging, but we can make educated guesses by observing current trends.

Let’s begin by assessing the current landscape.

Looking Around

Big players are moving their pieces and placing their bets. That can be taken as an indicator of where we are heading.

As with previous technological shifts, we see a process similar to evolutionary radiation, in which a species evolves to fill a multitude of available niches. That’s what we see in the tremendous effort put into LLMs, from end-user applications to AI-optimized hardware.

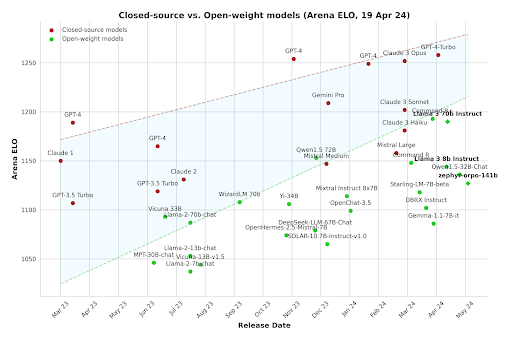

Smaller Models are Getting Smarter

In recent years, the trend has been to build ever-larger LLMs. While this continues, there's also a push to make smaller, more efficient models. Why? Because these things are hardware-intensive! This means high infrastructure costs and limits on applications like running AI on user devices, such as phones, or even locally inside web browsers.

A variety of tricks are being actively researched to achieve this goal, such as:

- Quantizing model weights
- Dynamically swapping specialized LoRAs
- Model distillation
- Specialized training schedules with curated datasets
- Pruning weights

...among many others. Very specialized tiny models are also getting good results.
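To make the first trick a bit more concrete, here is a minimal sketch of symmetric int8 quantization in plain Python. It is only an illustration under simplifying assumptions (one scale for the whole tensor, a tiny hand-written weight list); real toolkits use more elaborate schemes, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to int8-range integers."""
    max_abs = max(abs(w) for w in weights)
    # The scale maps the largest magnitude onto 127; use 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.03, 0.5]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Each weight now fits in one byte instead of four, at the price of a rounding error of at most half the scale per weight.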

Some examples of these efforts (the names do not matter much, as they will be beaten too in a very short while):

- Llama 3 (70B parameters) is beating the original ChatGPT 3.5 (175B). A x2.5 reduction!
- SQLCoder (7B), a (relatively) small model for SQL query creation. Its performance is comparable with GPT-4 (~1.8 trillion parameters). x257 smaller!
- Phi-3-small (7B) is beating ChatGPT 3.5 on some benchmarks. x25 tinier!
- Phi-3-vision (4.2B) performs comparably to much larger vision models like GPT-4V (~1.8T). A x428 diminution!

So, we have a plethora of open models that perform well and are not that large. Fine-tuning them on a particular task can lead to superb results. Based on current results and research, there are many reasons to believe that current LLMs perform well below what their size and compute allow. That is, future LLMs of a given size, let’s say 20 billion parameters, will be capable of doing much more and will be less prone to errors.

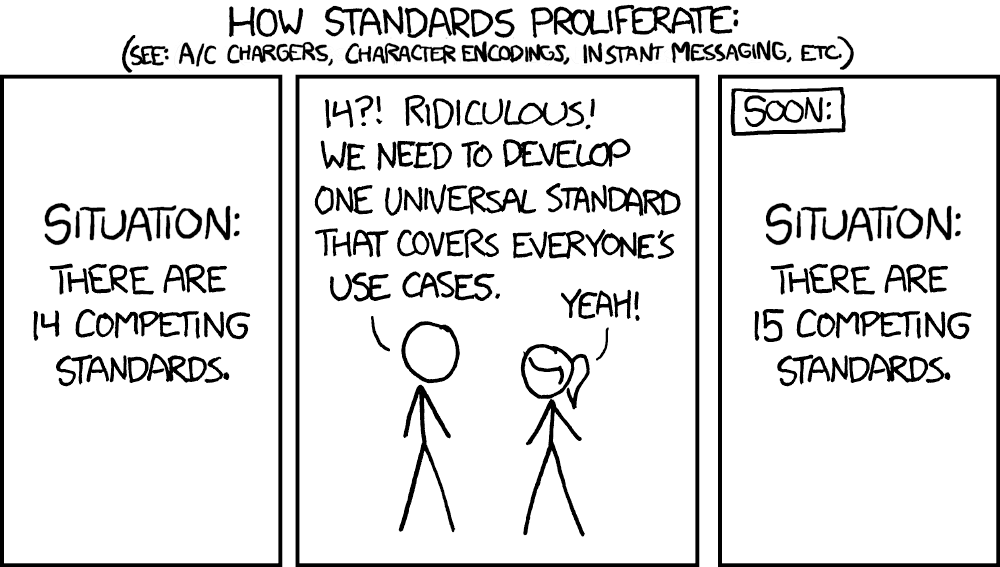

More Standardization Everywhere

The ecosystem around LLMs is maturing. Things are moving from hard proof-of-concept custom builds to standardized production-ready systems. Deploying things while remaining secure and scalable is getting easier.

Take, for instance, Amazon Bedrock, AWS’s Gen AI solution. It offers API endpoints to run inference on a set of LLMs and image generation models. It’s serverless/SaaS, meaning you do not need to worry about your homemade production setups which are both expensive and complex.

However, if you do need a more customized setup, the server-based route is also getting more standardized, as exemplified by NVIDIA NIM. It’s an inference-optimized way to scale AI applications in your own infrastructure. HuggingFace has recently made it easier to deploy models with NIM.

This means running both standard models and customized ones is becoming easier every day.

There are more and more large competitive models available, so whatever direction you go, you have options. NVIDIA itself has recently published Nemotron-4-340B-Instruct, a really, really big LLM.

The maturity of the LLM ecosystem is highlighted by Model Spec, which outlines the necessary functionalities, performance metrics, and safety measures for deploying large language models. This standardization enhances compatibility, accelerates innovation, and reduces time to market for AI solutions, making adoption and scaling easier for organizations.

LLMs are the new UI/UX

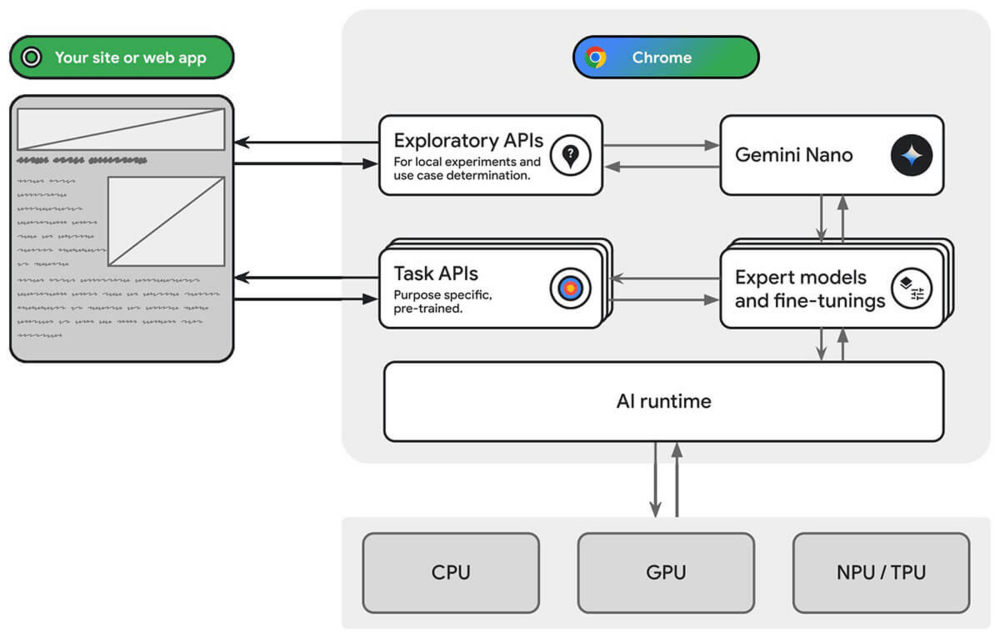

Apple has recently been working on integrating LLMs with their phones (ref1, ref2). The basic premise is that the AI will be able to perform actions on your behalf based on natural language prompts from the user, hopefully fulfilling the promise of an actually useful virtual assistant.

Similarly, Google Chrome is playing around with incorporating Gemini into your day-to-day browsing (ref1, ref2).

Microsoft's Copilot+PC is a standout. Its new Recall feature continuously captures your activity, making it easy to revisit documents or websites by describing what you were doing. Phi-3-Silica, one of the small models of the kind discussed above, will be integrated into Copilot+PC, showing that Microsoft is already moving in this direction locally. Note that Recall has drawn criticism on security and privacy grounds.

Countless companies, alongside major players, are integrating AI into their products for enhanced UI/UX. Zoom’s AI Companion is an excellent example of leveraging LLMs for your business case. It helps with a variety of tasks across the Zoom platform. Slack and ClickUp are doing the same to attract more users and offer higher-priced tiers.

Businesses Show Interest in Incorporating LLMs

Beyond the UI/UX perspective, businesses are showing interest in LLMs for various reasons. While there’s no lack of hype, LLMs are addressing real pain points. These include summarizing user feedback, generating ads, intelligently sifting through large document corpuses, teaching, assisting with analytical tasks, coding, reducing customer service costs, and more.

With such a wide range of applications, a surge of companies is emerging trying to hit those pain points in different markets. Also, the more traditional companies are beginning to incorporate these tools to improve the productivity of their workers. Not to mention people themselves going out of their way to use LLMs to make their jobs easier.

- Filevine is using LLMs to help lawyers be more productive by extracting information from documents, writing drafts, etc. (ref).

- AWS is, as of today, previewing AWS App Studio, which “uses natural language to build enterprise-grade applications”. In short, a low-code tool using LLMs. They also offer an AI-powered assistant for various tasks called Amazon Q.

- GitHub Copilot, released in 2021, is now a well-established tool that helps developers code faster. It currently utilizes GPT-4 API calls.

Open Source is Catching up to Speed

Follow us on LinkedIn to catch part 2 of this blog, where we will tell you what is coming in the future.

Mutt Data’s Journey to AWS Advertising & Marketing Technology Competency

👉 What is AdTech & MarTech? Why is it important?

👉 What is the difference between AdTech and MarTech?

👉 Etermax

👉 X3M

We’ve got huge news: Mutt Data is now officially an AWS Advertising and Marketing Technology Competency Partner! This competency continues our ongoing partnership journey with AWS, from AWS Select Partners, RDS specialists and AWS Advanced Partners. Now we’ve been recognized in the field of Advertising and Marketing.

Mutt Data has become the only AWS Partner in the SOLA region to obtain this competency.

This competency validates our experience and successful projects in the fields of AdTech and MarTech. But what are they?

What is AdTech & MarTech? Why is it important?

We’re experts at the intersection of Technology and Data in the fields of Advertising and Marketing. Basically, we empower efficiency in your marketing actions through a combination of data, technology, Machine Learning, Deep Learning, Neural Networks and Predictive Analytics! With the power of data it’s possible to find ways to efficiently distribute your marketing spend to maximize results or find the users who are most likely to be interested in your Ad. All that and more can be automated through AI Algorithms and optimized through developing technical capabilities of an AdTech or MarTech stack.

What is the difference between AdTech and MarTech?

AdTech (Advertising Technology) focuses on tools and software used to manage, deliver, and analyze digital advertising campaigns. It includes technologies such as demand-side platforms (DSPs), supply-side platforms (SSPs), ad exchanges, ad networks, and data management platforms (DMPs). AdTech helps advertisers reach their target audience, optimize ad spend, and measure the effectiveness of their campaigns. It also includes tech related to building, running and optimizing Retail Media Networks and Ad Managers.

MarTech (Marketing Technology) encompasses tools and software used to plan, execute, and measure marketing campaigns across various channels. This includes customer relationship management (CRM) systems, email marketing platforms, social media management tools, content management systems (CMS), and marketing automation platforms. MarTech enables marketers to create personalized customer experiences, track engagement, and optimize marketing efforts.

AdTech and MarTech are two sides of the same coin, and each plays a critical role in the advertising ecosystem. AdTech encompasses technologies that help to sell ads, focusing on the delivery and measurement of advertising campaigns. MarTech, on the other hand, groups together technologies that aid in buying ads and in managing and analyzing marketing efforts to optimize consumer engagement and sales.

We have experience carrying out technical tasks like:

AdTech

✅ Research, design and development of new auction, pricing and pacing mechanisms via Real-Time Bidding (RTB), a Demand-Side Platform (DSP) and a Supply-Side Platform (SSP).

✅ Developing solutions for Dynamic Pricing, cost based optimization, relevance based optimization, and automated bidding.

✅ Training of Machine Learning models for predicting Win Rate, Cost, CTR, CVR, and CVR_VTA. Implementing multi-armed bandit models for creative optimization.

✅ Building performance based pacing and price modulation.

✅ Event streaming architectures combining Kafka and Druid/Pinot or Kinesis with S3.

✅ Efficient Data Lakes combining AWS EMR with Presto/Trino, AWS Athena, AWS Glue, and/or Delta Lake format.

✅ Predicting Ad Network CPM and latency at the user level to optimize Mobile Ad Waterfalls.

✅ MLOps capabilities:

✅ Real-time Feature Store

✅ Automated model variant generation, versioning, backtesting, and monitoring.

MarTech

✅ Creating a lift-based Multi-Touch Attribution system based on Deep Learning models.

✅ Combining data-driven MTA, with Marketing Mix Modeling and Lift Tests for measuring true marketing incremental lift and saturation.

✅ Generating data-driven accurate marketing spend plan scenarios through the combination of MMM and demand forecasting.

✅ Developing predictive LTV models for understanding true campaign ROI for user acquisition.

✅ Understanding campaign and Adset saturation and optimizing bids and budgets to maximize overall results.

✅ Using Generative AI to generate creatives and copies at scale for large ecommerces.

✅ Optimizing product feeds and user segmentation.

✅ Predicting user propensity and product recommendation.

✅ Applying Generative AI and Multi-Armed Bandit approaches to generate optimal messaging content and delivery for Own Media.

Our Trajectory

Mercado Libre

MercadoLibre is the largest online commerce and payments ecosystem in Latin America. Through a suite of technology solutions including Mercado Pago, Mercado Ads, Mercado Envios, and Mercado Crédito, they enable customers in 18 countries to carry out their commerce, offering solutions across the entire value chain. We’ve had multiple fruitful collaborations with Mercado Libre:

- Lift Based Multi-Touch Attribution: Online attribution systems typically try to measure the contribution of each advertisement touchpoint (usually ad clicks) in conversion journeys. This means trying to accurately measure the incremental lift brought by each touchpoint. Our teams collaborated to design and implement a new multi-touch attribution platform using advanced deep-learning models for understanding true click-level impact. The result: a real-time and campaign-level measurement much closer to the real lift estimations of each channel.

- Internal Bidding System: the Product Ads Team (responsible for the searched items promoted by MercadoLibre sellers) needed to develop an improved internal bidding system. We developed a new system that runs bidding in real time, as the search is made. The system performs CTR (Click-Through Rate) and CVR (Conversion Rate) estimations on each item to determine its position while estimating the impact on the marketplace’s GMV (Gross Merchandise Volume) and deciding the optimum tradeoff. The result: a 25% increase in clicks without affecting organic GMV.

Etermax

Etermax is one of the largest gaming companies in the world, especially known for its trivia games headed by the global hit Trivia Crack. Mutt Data collaborated on improving the performance of their Demand Side Platform against the most established mobile Demand Side Platforms. The result? After just four months of work, with no modifications to the previously used budget, Etermax’s system showed a 40% decrease in Cost Per Click (CPC).

X3M

X3M emerges as a fresh and dynamic AdTech enterprise with strong industry support. Their primary goal is to establish a publisher-centric approach in an industry predominantly focused on advertisers. Their comprehensive range of services encompasses a fully managed AdOps service, a mediator, an exchange, and various other offerings. X3M's mediator covers a key aspect of their services: optimizing waterfalls. This presents a unique challenge, as publishers possess less data than advertisers and must navigate multiple advertising platforms with varying behaviors, all while contending with the ever-shifting landscape of buying mechanisms. They contacted Mutt Data in order to bring a fresh approach leveraging AI and Machine Learning to achieve the next significant breakthrough. The result? An innovative real-time waterfall optimization algorithm driven by state-of-the-art AI systems that led to a 20% increase in CPMs.

What comes next?

Obtaining the AWS AdTech&MarTech Competency is a huge milestone for us, recognizing our successful projects in this field. Together with AWS, we’re working hard to deliver the best solutions in the world of AdTech & MarTech (keep your eyes open for future success cases!). And who knows, maybe even a new competency 😉.

If you’re interested in our data or AdTech&MarTech solutions, you can contact us here.

Why Data4Good is Good for All

Making Data available for everyone

From online shopping to targeted advertising, weather forecasting to personal photo libraries, our world revolves around data. Companies analyze this information daily to optimize profits. But what happens when that data is not available to those who need it the most?

In the universe of non-governmental organizations (NGOs) focused on social inclusion and development, the use of data could signify a real improvement in people’s quality of life. However, given their lack of resources and technology, accessing and using this data is often a difficult hurdle to overcome.

This is where Mutt Data comes in: with our experience in data analysis we've helped countless companies across industries to implement modern AI solutions. We don’t just see data, we see possibilities for change and improvement. This is why we launched Mutt4Good, an initiative where technology and empathy align to support the organizations that really need it. From animal shelters to NGOs fighting for children’s rights, Mutt Data is becoming an ally to those who are working to make the world a better place.

Exciting initiatives

While these initiatives are not without challenges such as noisy data, changing priorities, and diverse ways of working, to name a few, we are very excited to work alongside passionate people who work tirelessly to make a difference. We’ve learned to adapt to different work methodologies, become more flexible and learned to embrace the diversity of approaches and points of view.

At Mutt Data, our culture is defined by self-reliance and continuous learning. We’ve found this to be fundamental in the development of disruptive projects for companies, as well as NGOs. From the creation of a satellite image bank to analyze communities to the implementation of Machine Learning models to attract economic donors more efficiently, Mutt Data has shown that data can be a very powerful tool in the right hands.

As Mutters, we are proud to actively participate in and promote positive change. By collaborating closely with NGOs, we are witnessing first-hand the direct impact our actions have on their initiatives. The feedback we’ve received from our partners has been overwhelmingly positive and encouraging. We are beyond grateful and humbled for the opportunity to partner with these organizations.

Our Objective

Mutt4Good’s objective is straightforward: we want to make data, and the opportunities it enables, available to those who need it the most. We are transforming data into powerful tools to drive much-needed social change. We aim to provide NGOs with the necessary lenses so that they can glimpse a world of possibilities, where every piece of data becomes an opportunity for change and hope. Because in this journey towards a fairer and more compassionate future, every small step matters, and we are here to guide the way.

This will be the first in a series of posts where we explore the initiatives Mutt is taking to use data to make the world better!

How our Annual Mutters’ Trip Transforms Our Daily Work

It's a bit of an understatement to say it’s very cool to see your team grow, take on new projects, delight new customers, and train new colleagues. To me, that growth is never as obvious as it is when we get together for our Annual Mutters' Trip (AMT)! 💥

Our annual company event has become, among other things, a great moment to take stock, a yearly snapshot of where we are and how we got there.

Our team is the reason we can take on bigger projects and continue helping companies transform their business with data and machine learning. Our team is the reason we're excited to start work in the morning and why we can maintain a positive attitude when facing challenges. Our remote team is also spread out, which means we are very mindful about the events and initiatives that we organize to bring the team together.

One of the challenges of working in the People team at a growing company is how to keep the culture and values alive and top of mind. We believe these values are one of the things that make our team such a unique team to work with.

Our AMT is one of the Mutt Data traditions that plays a key role in fostering our values. In this post we want to share a little bit about our latest adventure, and how we planned the trip and activities to connect and continue fostering our unique culture.

Make it Memorable 💯

We start planning early. The AMT took over 5 months of preparation and the involvement of 20+ providers to ensure we could deliver a memorable experience. The planning, budgeting and organization are taken seriously and followed up closely like any other critical company project. Because it is.

We want it to be bigger than a typical company party or corporate retreat. We carefully plan the leadership presentations, meme-competition, team activities and even souvenirs.

Every mutter got a very unique t-shirt with our favorite Mutt and a wink to Mar del Plata's famous sea lions. 🦭

Everybody is Invited 🙋

The more the team grows and expands to new locations, the more we have to consider in our planning! But working remotely, we feel it's important to have this opportunity for some face-to-face time with everybody. Every year it gets a little more challenging, but we love a good challenge!

Setting the Tone

After the welcome lunch, our CEO Juan Pampliega and leadership spoke about our successes, challenges ahead and how we are charting our course for the future. While we always favor open-team communication and host regular all hands, we use this unique opportunity to have a big-picture conversation.

Different Activities 🤹

We wrapped up the first day with a visit to a local brewery, kicking off more informal quality time with the team.

When planning the activities, we looked for things the team had maybe not done before and could learn from, activities that could let us learn a little about the local place, and that would also allow for some chill time for the team to chat.

We started day 2 with a trek along the cliffs with a local tour team that shared insights into local ecology and environmental preservation.

Hungry from the hike we shared a delicious lunch with camarones and spent a few hours playing football, volleyball and tejos on the beach. Nothing like a bit of friendly competition to get the team together!

Some decided to keep the competition going and headed to the go-kart track, while others opted for chill time back at the hotel.

We wrapped up the day with some dancing and drinks 💃🍹

The last day kicked off with an energizing breakfast and presentation before we headed into town for the obligatory tourist snapshots, one last team lunch and souvenirs.

We believe it's important to combine planned activities with downtime where the team can simply talk and get to know each other better. Much like our beloved donut-coffee breaks during the week, we believe random conversations during downtime can go a long way in helping us connect!

There's a lot more I can share about the trip, but I hope this snapshot is enough to show a little bit about the awesome culture and team we are building. I'm super excited to add this snapshot to our album. Another postcard of our journey! 📸

If you like what you've read and think you'd like to be a part of our team, good news! We're always looking for awesome people to join!

Unlocking Data Insights with Generative AI

New tools are coming left and right. As you probably know, AI is the new buzzword in town. New opportunities for using AI in new areas are constantly popping up. Here is one of them.

It’s fairly common to want to create dashboards with plots, statistics and everything you need in order to understand your data. You know: “How much are we spending on X?”, “What does our seasonal sales behavior look like?”, that sort of thing. The consumers of these dashboards usually include non-technical people like managers. They just want to know how the business is doing. There’s a disconnect between the producers of these dashboards (technical people) and the consumers (non-technical people). With LLMs we can bridge the gap: let managers simply ask questions and get their plots, their metrics, or even new interesting questions to explore.

That’s the main gist of it. It sounds great but it’s far from perfect. A common mistake when chasing AI buzzwords is not having a proper data pipeline in place, so there is nothing for the AI to leverage.

You need to sort your data

Wanting to do AI is alright but it's not that straightforward. Having your data ready for consumption is the most important thing. The options we present below need that. There’s no cutting corners. Do you even have the data? Where is it? Is it in multiple databases? Is it used for operational/transactional purposes?

All these and more are prerequisites before you can think about adding AI to your analytics. This is no easy task. You need data engineers to set up pipelines to integrate your sources and do the pertinent transformations. That’s where the modern data stack comes in.

The modern data stack

While every business is unique there are repeating patterns that don't actually change that much. Companies usually use data for two reasons.

- First, there is the transactional data that is used for operational purposes. That is, to actually handle the business (storing your images, saving your users' login information, orders, that sort of stuff).

- Second, data is leveraged to improve the business or get a better understanding of it. Your data visualization and machine learning algorithms go here.

However, it's generally not a good idea for both of these use cases to use the same data directly. Large-scale analytics may throttle the performance of your transactional operations. The modern data stack consists of the usual collection of resources and processes you need to handle your business data in order to use it for analysis. It’s the infrastructure you need: your data warehouses, your transformations, your visualizations, and your workflow.

We have our own version of the modern data stack. We’ve already talked about it ([1], [2]). But how does our AI assisted visualization fit into the data stack?

Usually, your OLAP system should ingest data from your primary sources and do some processing. Whether to do it at fixed intervals or continuously depends on the application. For our purposes, daily should be enough. It’s a good idea to copy some premade patterns for this. Once we have the processed data, it is simply a matter of directing it to the right destination. All this data pipelining, and finding the right transformations, is the hard part.
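As a rough illustration of such a daily ingestion step, the sketch below uses two in-memory SQLite databases as stand-ins for the transactional source and the analytical store. The table names, columns and the `ingest_day` helper are all hypothetical; a real pipeline would run on a scheduler against your actual databases.

```python
import sqlite3

# In-memory stand-ins for the transactional source and the analytical store.
oltp = sqlite3.connect(":memory:")
olap = sqlite3.connect(":memory:")

oltp.execute("CREATE TABLE orders (id INTEGER, day TEXT, amount REAL)")
oltp.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "2024-06-01", 10.0), (2, "2024-06-01", 5.5), (3, "2024-06-02", 7.0)],
)
olap.execute(
    "CREATE TABLE daily_sales (day TEXT PRIMARY KEY, total REAL, n_orders INTEGER)"
)


def ingest_day(day):
    """Aggregate one day of orders and upsert it into the analytical table."""
    total, n_orders = oltp.execute(
        "SELECT COALESCE(SUM(amount), 0), COUNT(*) FROM orders WHERE day = ?",
        (day,),
    ).fetchone()
    # INSERT OR REPLACE keeps the job idempotent: re-running a day overwrites it.
    olap.execute(
        "INSERT OR REPLACE INTO daily_sales VALUES (?, ?, ?)",
        (day, total, n_orders),
    )
    olap.commit()


ingest_day("2024-06-01")
ingest_day("2024-06-01")  # re-running the same day does not duplicate rows
rows = olap.execute("SELECT day, total, n_orders FROM daily_sales").fetchall()
```

Keeping the step idempotent is a deliberate choice here: a failed or repeated daily run can simply be executed again without leaving duplicate rows behind.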

OK, we have our data pipeline ready. Now, on to our actual objective: doing BI with AI.

Different options out there

We’ve checked a couple of options for doing this. You’d still need to explore your particular use case. We can split them into 3 main categories. All alternatives are still new so they are not yet mature options.

- SaaS: Using canned premade options out there. They tend to be easier to use and you don't have to worry about their internal behavior. The price varies but, generally, it’s not cheap.

- API calls: Leveraging calls to third-party LLMs to do it on your own.

- Fully local: Have everything running in your own infrastructure.

We exemplify each category in the following sections and go more in depth into their advantages and disadvantages.

Amazon Q in QuickSight

AWS has recently launched a new service called Amazon Q, which uses LLM magic to help people with their AWS questions. It’s still in preview, so it's pretty new. They also have Amazon Q in QuickSight, which allows users to do this AI+BI thing. You ask questions in natural language and get a result. The cool thing about it is that you don’t need to worry much about how to make it work. It’s a plug-and-play sort of feature. It supports multiple types of sources, both from AWS (like S3 or Redshift) and your own local databases.

The AI answers might still be incorrect though. You need to be careful and verify answers. Plus, you may have to manually give columns descriptive names and synonyms to make the LLM's job easier and more accurate. Now, speaking of price, it’s not particularly cheap, but this may change a lot over time. They have different pricing plans but, making some assumptions, according to this it would cost around:

$34 per author per month + $0.33 per reader session

Authors are the ones that do the configuration (connect the data, set the descriptive names and so on). Readers are your managers and such. The bill can clearly balloon out of proportion if your organization has a lot of readers.
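To get a feel for how the bill grows with the number of readers, here is a back-of-the-envelope sketch using the two figures quoted above. The team sizes and sessions per reader are made-up assumptions, and the function ignores any caps, discounts or free tiers the real pricing plans may include.

```python
AUTHOR_MONTHLY_USD = 34.0   # per author per month, figure quoted in the text
READER_SESSION_USD = 0.33   # per reader session, figure quoted in the text


def quicksight_monthly_cost(authors, readers, sessions_per_reader):
    """Rough monthly bill: flat author fees plus pay-per-session readers."""
    author_cost = authors * AUTHOR_MONTHLY_USD
    reader_cost = readers * sessions_per_reader * READER_SESSION_USD
    return author_cost + reader_cost


# Hypothetical organizations, both with 2 authors and 20 sessions per reader.
small_team = quicksight_monthly_cost(authors=2, readers=10, sessions_per_reader=20)
large_org = quicksight_monthly_cost(authors=2, readers=500, sessions_per_reader=20)
```

Under these assumptions the small team pays about $134 a month, while the large organization pays about $3,368, almost all of it reader sessions.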

The best thing about this option, I think, is that it's easy to set up and use. You don't need to care how it works. It’s accessible.

Use an LLM API

LLMs are everywhere now: ChatGPT, Cohere and Gemini, for instance. You could try to “do it yourself” and talk to an LLM using its API. This has some advantages over QuickSight:

- It’s cloud agnostic.

- You could easily change the underlying LLM API call and it wouldn’t change a thing.
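One way to keep the underlying LLM swappable is to hide it behind a small interface. The sketch below is an assumption about how that could look, not a vendor SDK: `LLMClient`, `EchoClient`, `UppercaseClient` and `ask` are hypothetical names, and the two backends are fakes standing in for real API wrappers.

```python
from typing import Protocol


class LLMClient(Protocol):
    """Anything with a complete() method can act as the backend."""

    def complete(self, prompt: str) -> str: ...


class EchoClient:
    """Fake backend for local testing; a real one would call a vendor API."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UppercaseClient:
    """Second fake backend, only to show that swapping needs no other changes."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def ask(client: LLMClient, question: str) -> str:
    """The BI layer depends only on the LLMClient interface."""
    return client.complete(f"Answer this analytics question: {question}")


first = ask(EchoClient(), "How are sales doing?")
second = ask(UppercaseClient(), "How are sales doing?")
```

Changing provider then means writing one new class with a `complete` method; the code that builds prompts and renders results stays untouched.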

However, the tools to do your BI using the LLM are not yet that mature. Performing the queries, constructing dashboards and such is no trivial task. This may change with time, but as of today there’s no clear path on how to do it.

Another issue is data privacy. Although you don’t need to show your data to the LLM, it would likely need (at least) to know your data schemas. How could it write queries otherwise?
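The sketch below illustrates the idea of sharing schemas but not rows. It uses an in-memory SQLite database as a stand-in for your warehouse; the `sales` table, its contents and the `schema_only_prompt` helper are hypothetical, and the resulting prompt would still have to be sent to whichever LLM you choose.

```python
import sqlite3

# Stand-in warehouse with one hypothetical table and one row of data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (day TEXT, product TEXT, amount REAL)")
conn.execute("INSERT INTO sales VALUES ('2024-06-01', 'widget', 10.0)")


def schema_only_prompt(connection, question):
    """Build a prompt from table definitions only; no rows are read."""
    ddl = [
        row[0]
        for row in connection.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table' AND sql IS NOT NULL"
        )
    ]
    schema = "\n".join(ddl)
    return f"Given these tables:\n{schema}\nWrite a SQL query that answers: {question}"


prompt = schema_only_prompt(conn, "What were total sales per day?")
```

The prompt contains the `CREATE TABLE` statement but none of the stored values, so column names are exposed to the provider while the data itself is not.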

In terms of cost, it depends on the LLM provider, but it’s pretty cheap! You’ll likely need an instance, but the heavy lifting is performed through the API, so you can grab a low-tier one and you should be fine. Just to have a rough estimate, as of March 2025, grabbing a cheap AWS instance we get:

0.0208 USD per hour per instance + Query cost

Estimating the query cost is harder. How many tokens are you using? How many calls? What model? This would probably need to be estimated for the specific use case.
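Once you have answers to those questions, the arithmetic itself is simple. The sketch below combines the instance price quoted above with a per-token charge; the call volume, tokens per call and price per 1,000 tokens are placeholder assumptions, not any provider's actual rates.

```python
INSTANCE_USD_PER_HOUR = 0.0208  # low-tier instance, figure quoted in the text


def api_monthly_cost(calls, tokens_per_call, usd_per_1k_tokens, hours=24 * 30):
    """Always-on instance plus per-token API charges for one month."""
    instance = INSTANCE_USD_PER_HOUR * hours
    queries = calls * tokens_per_call / 1000 * usd_per_1k_tokens
    return instance + queries


# Hypothetical workload: 5,000 calls of 2,000 tokens at $0.002 per 1k tokens.
estimate = api_monthly_cost(calls=5000, tokens_per_call=2000, usd_per_1k_tokens=0.002)
```

With these placeholder numbers the instance costs about $15 a month and the queries about $20, so the total stays around $35.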

The scalability of this approach is limited by the API we are using. You may hit rate limits if used massively.

A local LLM

As an alternative to the API approach, you can drop the API call and host the LLM yourself. Most considerations from the previous section still apply, with some exceptions. First, your data never leaves your organization. The big advantage of locally hosting your LLM is that you can:

- Grab the latest cutting-edge open model available.

- Fully customize it for your organization with your organization's data in a private way. This can improve the quality of your generations.

On the other hand, it's not at all clear how to do it. And it definitely ain’t cheap. Good LLMs need beefy GPUs to support them. Just to give an example, using a GPU with 16 GB of VRAM (on the _low end_ when it comes to running LLMs):

0.7520 USD per hour per instance

In terms of scalability, it can become really expensive if aimed at a massive number of users. You would need a lot of GPUs. Managing autoscaling is still cumbersome to get right. It takes a lot of effort and time to do it, and thus money. Having said that, provided that it's only used by a few people, this is not an issue.
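A quick sizing sketch shows how fast this grows. It assumes a fleet sized for peak load with no autoscaling, priced at the hourly figure quoted above; the number of concurrent users one GPU can serve is a placeholder assumption that depends heavily on the model and workload.

```python
import math

GPU_INSTANCE_USD_PER_HOUR = 0.7520  # 16 GB GPU instance, figure quoted in the text


def gpu_fleet_monthly_cost(peak_concurrent_users, users_per_gpu, hours=24 * 30):
    """Size a fleet for peak load (no autoscaling) and price it for a month."""
    gpus = math.ceil(peak_concurrent_users / users_per_gpu)
    return gpus, gpus * GPU_INSTANCE_USD_PER_HOUR * hours


# Hypothetical capacity of 4 concurrent users per GPU.
few_users = gpu_fleet_monthly_cost(peak_concurrent_users=3, users_per_gpu=4)
many_users = gpu_fleet_monthly_cost(peak_concurrent_users=400, users_per_gpu=4)
```

Under these assumptions a handful of users fits on one GPU at roughly $541 a month, while 400 concurrent users would need 100 GPUs at roughly $54,000 a month.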

The previous discussion also assumes that you have a dedicated instance. There are some services that provide serverless GPU options, but that’s another can of worms, and cold starts carry latency problems, so it's probably not a good alternative.

It also seems, according to some people working on this, that finetuning is generally not needed. Few-shot prompting is enough, so part of the benefits of customization are diluted.
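Few-shot prompting here simply means putting a handful of worked examples in front of the real question. The sketch below shows one possible prompt layout; the question/SQL pairs, the `orders` table and the `few_shot_prompt` helper are hypothetical, and the right format depends on the model you use.

```python
# Hypothetical question/SQL pairs; in practice they would come from your own warehouse.
EXAMPLES = [
    (
        "How many orders did we get yesterday?",
        "SELECT COUNT(*) FROM orders WHERE day = DATE('now', '-1 day');",
    ),
    (
        "What is our total revenue?",
        "SELECT SUM(amount) FROM orders;",
    ),
]


def few_shot_prompt(question, examples=EXAMPLES):
    """Prepend worked examples so the model can imitate them without finetuning."""
    shots = "\n\n".join(f"Question: {q}\nSQL: {sql}" for q, sql in examples)
    return f"{shots}\n\nQuestion: {question}\nSQL:"


prompt = few_shot_prompt("What is the average order amount?")
```

The examples can be edited or replaced at any time without retraining anything, which is a large part of why this approach is attractive.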

Wrapping up

We explored a couple alternatives for mixing up business intelligence with AI. We went through costs, scalability issues and how to connect them to your data stack. These solutions are still fairly new. In time, they’ll mature into actually trustworthy alternatives that will help managers understand their data. Connecting them to your organization will (hopefully) make the whole experience easier for managers.

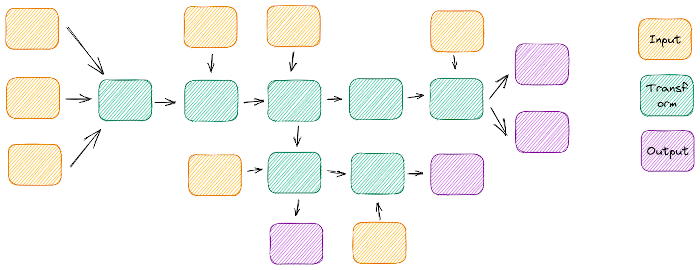

When and How to Refactor Data Products

Introduction

Data Products are typically evolved rather than designed: they are the result of exploratory experimentation by Data Scientists that eventually leads to a breakthrough. Much like with evolved organisms, this process produces vestigial structures, redundancies, inefficiencies and weird looking appendages.

While this organic growth is the natural output of the exploratory phase, the selection pressures acting on your Data Product suddenly change when it’s deployed into a production environment. This shift in environment must be accompanied by a redesign to withstand large volumes of data, or it will inevitably collapse.

In this article, we explore when (and if) refactors are necessary and which are the best strategies to approach them.

Symptoms

Embarking on a sizable refactor can be a daunting task (or even a fool’s errand), so it’s not recommended unless it’s absolutely necessary. The first thing you need to do is to evaluate the symptoms that your system is experiencing and take stock of your tech debt.

The usual symptoms a Data Product experiences are:

- Lack of visibility into the process

- Difficulty in implementing new features

- Slow experiment iteration rate

- Multiple and disjointed data sources

- Multiple unrecoverable failure points, which produce dirty writes

- Unpredictable, untrustworthy and non-replicable results

- Slow data processing

- Low visibility of the data as it goes through the pipeline

Some of these symptoms by themselves have relatively easy fixes, but having several of them simultaneously points to a larger problem with the design: accidental complexity.

Accidental Complexity is a concept coined by Frederick P. Brooks, Jr.1, which refers to the unnecessary complexity a piece of software accrues that does not help to solve the problem at hand.

"From the complexity comes the difficulty of communication among team members, which leads to product flaws, cost overruns, schedule delays. From the complexity comes the difficulty of enumerating, much less understanding, all the possible states of the program, and from that comes the unreliability. From the complexity of the functions comes the difficulty of invoking those functions, which makes programs hard to use. From complexity of structure comes the difficulty of extending programs to new functions without creating side effects."

This accidental complexity is the cause of an all-too-familiar feeling to programmers: the inability to reason and communicate about a system. It impedes the mental representation needed to conceptualize distributed systems and leads to fixation on smaller, easier to grasp problems, such as implementation details.

"Not only technical problems but management problems as well come from the complexity. This complexity makes overview hard, thus impeding conceptual integrity. It makes it hard to find and control all the loose ends. It creates the tremendous learning and understanding burden that makes personnel turnover a disaster."

This also creates a vicious cycle: because the software is difficult to understand, changes are layered on rather than integrated in, which further increases complexity. A refactor is needed to eliminate accidental complexity and should redesign the system in such a way as to minimize complexity creep in the future.

Strategy

Refactoring a piece of software that’s being actively maintained can be tricky, so we recommend the following strategy.

Understanding

The first step is to perform a materialist analysis on the system:

What does it purport to do versus what does it actually do?

This question is deceptively difficult to answer, given everything we said about accidental complexity, but following it through will make plain the core contradictions within our system, from which all other symptoms originate.

To start to unravel this problem, we need to look into the inputs and outputs of our system and work from there. It’s a tedious but useful exercise, that will reveal the paths data takes through our system, where they cross, tangle and which ones never meet.

Multiple and disjointed inputs and outputs tend to signify accidental complexity

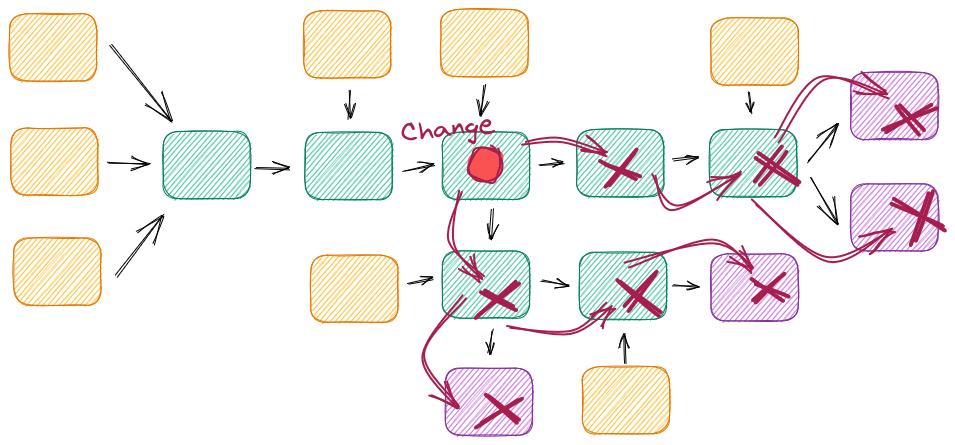

Another good way to understand the ins and outs of the system and its pain points is to try to implement a small change into it and wrestle with the ripple effects: is the change limited to a single function or class or does it have multiple effects downstream? This is especially useful if you’re not familiar with the project.

Without proper interfaces, a simple change can have cascading effects

Incremental Improvements

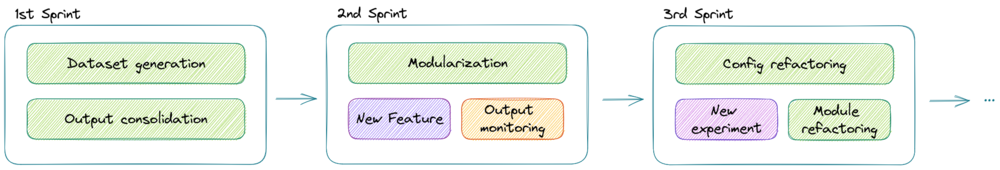

We have to keep in mind that this is a production system that still needs to be maintained, so we can’t simply start the refactor from scratch in a different repository and let the previous version rot away while we take a few months to finish the new one. As such, we need to strategically pick which parts to refactor first, working in incremental iterations and keeping as much of the original code as we can salvage.

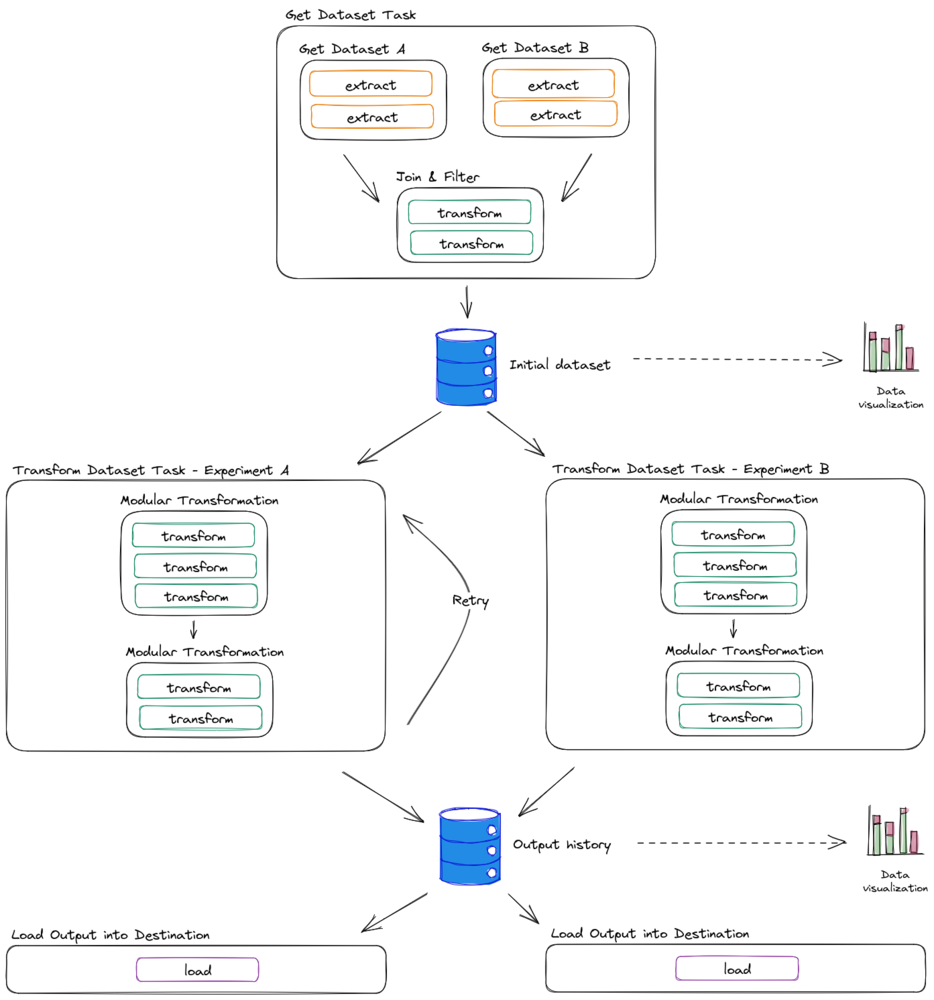

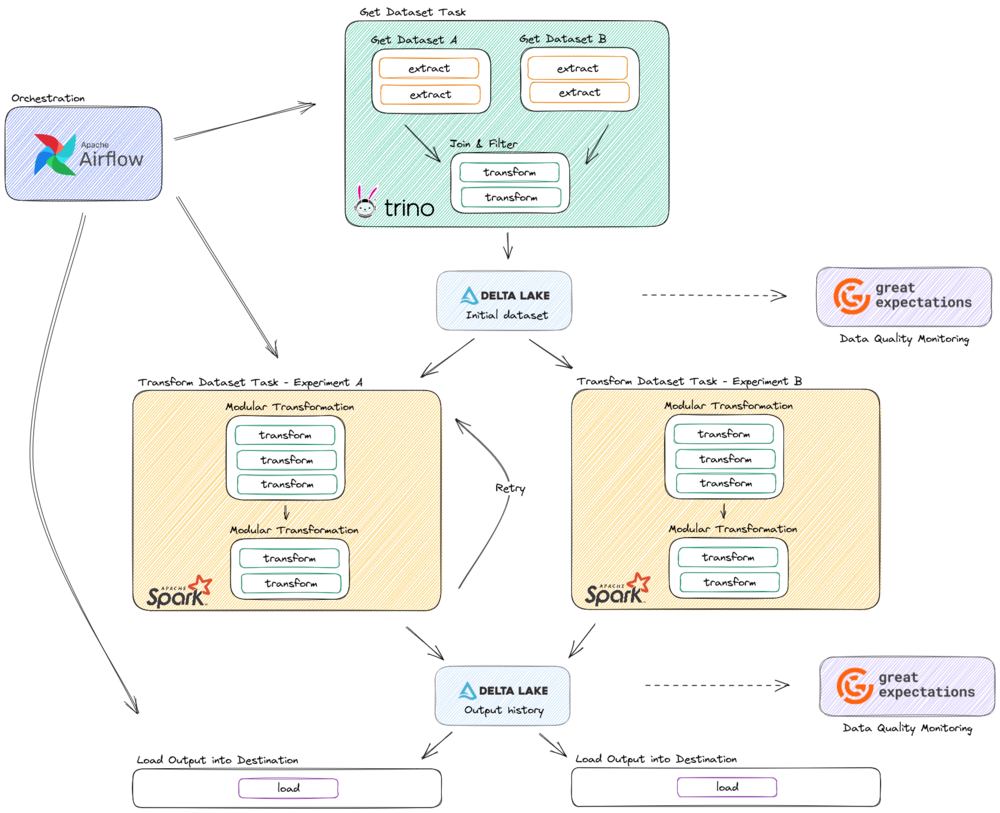

A good place to start is to consolidate all inputs into a “build dataset” module, and all the different outputs into one at the end. This will naturally provide starting and ending points for the flow of data, even though there’ll still be much plumbing to be done in the middle.

Well defined, incremental refactoring allows for new development in between improvements

Solutions

Now that we have a good understanding of the system and an incremental strategy to follow, we can start to implement our solutions.

Untangle

There’s a tendency in data products to have modules and functions with multiple responsibilities, stemming from the fact that exploratory code evolves adding modules where they are needed, but without putting emphasis on an overall design.

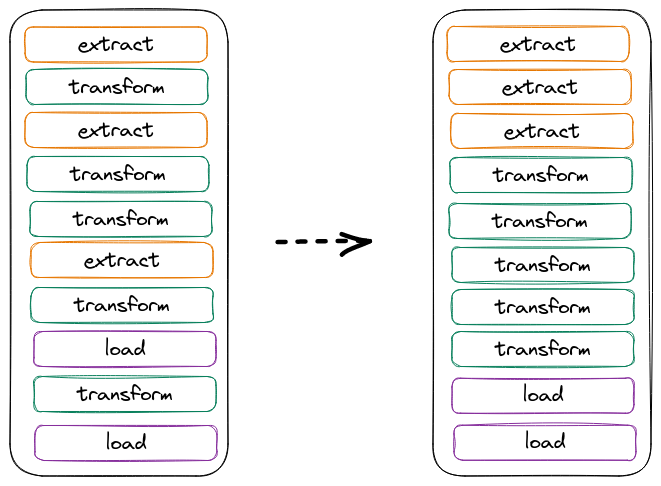

It’s useful, at this stage, to think about the three basic operations in Data Engineering: Extract, Transform, Load. The first step in untangling highly coupled modules is to break them down into (and group them by) these three basic operations.

Simply reorganizing the order of operations can enhance understanding

We can approach this process with a divide and conquer strategy: start at the function and class level and slowly work our way through to reorganize the entire design in this manner. As we progress, we’ll start to see opportunities to remove duplicated or pointless work.
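As a minimal sketch of what this grouping can look like at the function level, here is a toy pipeline split into the three operations. The CSV layout, the column names and the in-memory `sink` dictionary are all made up for illustration; in a real system the extract and load steps would talk to your actual sources and destinations.

```python
import csv
import io


def extract(raw_csv: str) -> list[dict]:
    """Extract: only reads the source, no cleaning or business logic."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows: list[dict]) -> list[dict]:
    """Transform: pure function, no I/O, so it is easy to test and move around."""
    cleaned = []
    for row in rows:
        if not row["amount"]:  # drop rows with a missing amount
            continue
        cleaned.append(
            {"customer": row["customer"].strip().lower(), "amount": float(row["amount"])}
        )
    return cleaned


def load(rows: list[dict], sink: dict) -> None:
    """Load: only writes the result (here, into a plain dict standing in for a table)."""
    for row in rows:
        sink[row["customer"]] = sink.get(row["customer"], 0.0) + row["amount"]


RAW = "customer,amount\n Alice ,10.5\nbob,\nalice,4.5\n"
totals: dict = {}
load(transform(extract(RAW)), totals)
print(totals)
```

Once the code is laid out this way, duplicated reads and redundant transformations tend to become visible, because each kind of work lives in one place.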

Modularize

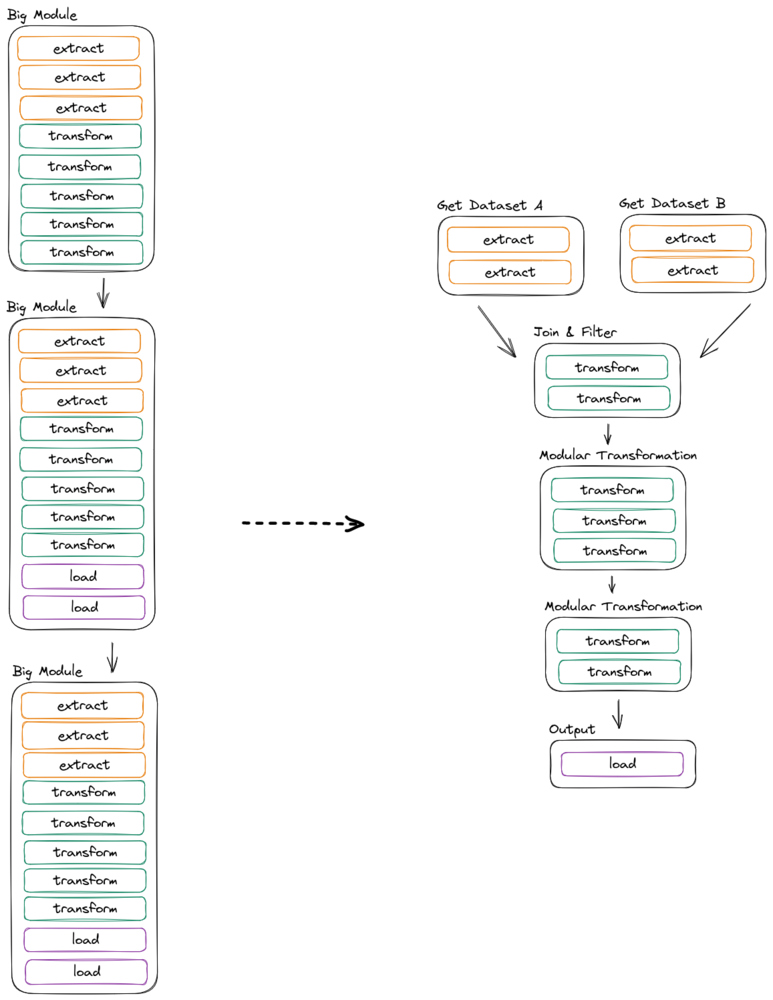

Now that our code is more organized, we can begin to conceptually separate it into modules. Modules should follow the single responsibility principle and be as clear and as concise as possible regarding the work they perform, which will allow us to reutilize them when scaling.

Data products usually grow piece by piece, driven by the demands of the business. This leads to modules being closely linked to business logic, which makes them either too specific or burdened with too many responsibilities. Either way, this renders them hard to reuse and, consequently, increases accidental complexity and reduces scalability.

With this in mind, we should feel free to reorganize the existing modules into new, cleaner ones, freeing ourselves from the existing artificial restrictions in the process. We’ll likely end up with smaller classes and functions than before, and they will be more reusable.

Having modules does not necessarily a good modularization make

The process of modularisation will also enhance, through abstraction, the way we think about our code. We can now rearrange whole modules without risk and it’s even likely that we’ll find out that previously tightly coupled processes can be completely separated into independent tasks.

Design Interfaces

Once we have every module conceptually separated, we can start planning how to separate the pipeline into orchestrated smaller tasks. To achieve this, we need to define interfaces for each task and strictly follow them.

In data pipelines we like to think of the data itself as the interface between tasks, with both sides following the strict structure and constraints we impose on that data. With this in mind, we usually define intermediary tables as interfaces between tasks and structured dataframes as interfaces between modules of the task.

Independent tasks need to persist data somewhere

There are several benefits of having intermediary tables as strong interfaces:

- They enforce a schema between modules.

- They provide checkpoints in between processing, in case there’s the need to retry.

- They provide visibility on the pipeline and can be used to monitor data quality

- They reduce resource consumption by reutilizing built datasets

- They provide a dataset and output history to reproduce experiments or train new ones.

- Tasks can be plug-and-play if they adhere to the interface

Having tables as interfaces unlocks a lot of potential in the design
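To make the idea of a table acting as an interface more concrete, here is a small sketch using SQLite from the Python standard library. The table name, columns and task functions are hypothetical; a production pipeline would more likely use a warehouse table and an orchestrator, but the principle is the same: the schema is the contract, and the two tasks only know about the table.

```python
import sqlite3

# The schema is the contract between the two tasks (names are illustrative).
SCHEMA = """
CREATE TABLE IF NOT EXISTS clean_orders (
    order_id INTEGER PRIMARY KEY,
    customer TEXT NOT NULL,
    amount   REAL NOT NULL CHECK (amount >= 0)
)
"""


def build_dataset_task(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Upstream task: writes to the intermediary table and nothing else."""
    conn.execute(SCHEMA)
    with conn:  # commits on success, rolls back if any row violates the schema
        # OR REPLACE makes a retry of the same batch idempotent.
        conn.executemany("INSERT OR REPLACE INTO clean_orders VALUES (?, ?, ?)", rows)


def aggregate_task(conn: sqlite3.Connection) -> dict:
    """Downstream task: depends only on the table, not on the upstream code."""
    cur = conn.execute(
        "SELECT customer, SUM(amount) FROM clean_orders GROUP BY customer ORDER BY customer"
    )
    return dict(cur.fetchall())


conn = sqlite3.connect(":memory:")
build_dataset_task(conn, [(1, "alice", 10.0), (2, "bob", 5.0), (3, "alice", 2.5)])
totals = aggregate_task(conn)
print(totals)

# A batch that breaks the contract is rejected at the interface.
try:
    build_dataset_task(conn, [(4, "carol", -1.0)])
    rejected = False
except sqlite3.IntegrityError:
    rejected = True
print("bad batch rejected:", rejected)
```

Because the bad batch never lands in the table, the downstream task can be retried or swapped for another tool without worrying about dirty writes from upstream.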

Optimize

Optimization in Big Data systems rarely comes from getting the most performance out of any given line of code but rather from avoiding duplicate work, choosing the right tools for each task and minimizing data transfer over the network.

This can only be fully achieved after applying the previous solutions in this list, as the modularity and understanding will allow us to rearrange the flow of data to our advantage and having tables as interfaces will allow us to have an array of tools to choose from to interact with the data.

Example of a refactored design showcasing different technologies for each task

Conclusions

Refactoring a Data Product is not an easy task, but can be greatly rewarding when done correctly. Ideally, however, these design considerations should be taken into account before deploying the Data Science MVP into production, as they are easier to implement before that stage.

Footnotes

- Frederick P. Brooks, Jr., "No Silver Bullet -- Essence and Accident in Software Engineering" ↩

Unraveling the Marketing Maze

There is so much content around Marketing Mix Modeling (MMM) that it took us a minute to consider how best to contribute to the conversation. In this first post, we’ll focus on the problems marketers are facing and which of these challenges MMM can help with.

A snapshot of today’s marketing landscape 📸

Drowning in Data

In today's hyper-competitive landscape, marketers face challenges on multiple fronts. With an ever-increasing number of channels and touchpoints, it's becoming increasingly difficult to understand what's truly driving sales and revenue. Having more data doesn’t instantly translate to easier data-driven decisions. Raise your hand if you can relate 🙋

Starving for Insights 📈

"According to HubSpot’s survey of marketing professionals, more than nine out of ten marketers leverage more than one marketing channel — and 81% leverage more than three channels."1 With so many channels in play, it's no wonder marketers are struggling to make sense of the data deluge.

But it's not just about the sheer volume of data; it's about extracting meaningful insights from it. Many marketers today find themselves data-rich but insight-poor. This is where MMM shines, helping marketers pinpoint the true drivers of success.

Ads have both short & long-term effects but the latter can be hard to track 📈

While immediate metrics such as click-through rates and conversion rates offer valuable insights into the initial response to an ad, they often fail to capture the enduring effects that ads can have over time. Ads possess both short and long-term effects, influencing brand perception, customer loyalty, and overall brand equity in the long run. However, the inability to measure these long-term effects effectively can lead marketers to overlook the true value and potential of their advertising campaigns.

Marketing is multichannel but measurement metrics are not 📺📱

While the trend has been to shift more spend towards digital channels, offline channels are still very relevant. Unfortunately, a lot of marketers struggle to integrate and compare performance data from different offline and online channels to inform planning and forecasting. Without a way to compare the impact of their different channels and campaigns, it’s challenging to identify the optimal spend for each one and avoid overspending past the point of saturation.

The Budgeting Bottleneck: Justifying Every Dollar 💸

It’s no secret that there is more and more scrutiny over marketing spend: CMOs are under immense pressure to demonstrate ROAS (return on ad spend) and to identify where spending their advertising dollars will have the most impact on their business goals. “According to Modern's report, driving revenue and short term growth is at the top of the priority list for CMOs in 2024. Nearly 75% of surveyed CMOs cited short-term company commercial growth as their most pressing priority for the next 12-18 months, above longer-term goals. This focus on immediate commercial impact indicates CMOs feel increased pressure from CEOs and boards to demonstrate ROI through metrics like monthly recurring revenue and quarterly sales results.” 2

Understandably, with this increasing pressure to make sense of the diverse data at their disposal, marketers are exploring Martech solutions. Modern’s report also indicated that “37% of CMOs are looking to increase the role of MarTech by expanding stacks, improving capabilities, and closing the skills gap”. 2

One of the Martech solutions that can help marketers address these issues is MMM.

[Refresher] Marketing Mix Modeling is a statistical technique used to analyze and quantify the impact of various marketing activities on sales or other desired outcomes.

So… how does MMM help marketers navigate these modern marketing challenges?

Imagine you're running multiple marketing campaigns simultaneously, such as TV ads, social media promotions, email campaigns, and in-store displays. Marketing Mix Modeling helps you understand how each of these different marketing tactics contributes to your overall sales or revenue.

It can help you answer questions such as:

- What is the impact of each channel on a specific KPI such as sales?

- What are the long-term brand effects of the ads?

- Are you over or underspending on any campaigns?

- What is the most efficient way to allocate your marketing budget?

Marketing Mix Modeling vs other measurement models

As if channel fragmentation and increased pressure on budgets weren’t enough, marketers face one more hurdle in their efforts to efficiently allocate and track their budgets: biased attribution.

As marketers grapple with an increasingly complex customer journey, traditional attribution models often fall short. Most marketers believe their attribution data is inaccurate or incomplete, but struggle to establish a single, reliable source of truth.

Most measurement solutions leave marketers struggling with:

- Bottom-of-the-funnel bias: Last-click or rules-based attribution usually over-credits channels closer to the bottom of the funnel, such as Paid Search, and under-credits top-of-funnel channels like TV.

- Privacy regulations restrict user-level attribution: With Apple and Google making changes to protect user privacy, such as the App Tracking Transparency (ATT) framework enforced since iOS 14.5 and the deprecation of third-party cookies, traditional user-level attribution on online channels is limited.

- Siloed solutions: Most models don’t allow marketers to compare the performance of their online and offline marketing channels within a single unified view. This lack of an omnichannel measurement solution prevents marketers from gaining a comprehensive understanding of their overall marketing effectiveness across all touchpoints.

We’re not saying that running MMM will magically solve all your marketing problems, but… it will help you sidestep these limitations. Marketing Mix Modeling considers all touchpoints, both online and offline, and since it works on aggregated data rather than user-level data, it is largely unaffected by these privacy restrictions.

Furthermore, combining MMM with data-based attribution allows for a holistic view of marketing's contribution and helps resolve the attribution challenge.

TL;DR: By unraveling the complexities of the marketing mix, MMM empowers data-driven decision-making, budget optimization, and a clearer understanding of what truly drives business growth.

Coming soon

In upcoming posts, we’ll cover some of the most frequent questions we receive when chatting with marketers such as:

- Does MMM work for every business? What types of businesses or industries benefit the most from Marketing Mix Modelling?

- What do advertisers need in order to implement MMM in their marketing stack?

- What does the MMM output look like? How can marketers leverage these insights?

And many more. As data nerds, we’ll do our best to answer straightforwardly and without the frills, so stay tuned and if you have any questions for us, don’t hesitate to reach out!

- HubSpot, “Top Marketing Channels for 2024 +Data”. ↩

- The CMO Club, “The Top Priorities And Challenges Of CMOs In 2024: Report”. ↩↩

Generating Usable Images with AI

Introduction

As we mentioned in our previous post, GenAI is unreliable. On top of relying on business rules to tame this issue, we can also attempt to improve the generation process in some sense.

In this post we introduce a couple of techniques and tricks to improve generation and detect problems, specifically for the case of image generation.

From a business perspective, making generative AI more reliable, as already mentioned, allows us to take different routes: opt-out instead of opt-in, less human involvement, etc. Moreover, it unlocks some applications that would not be possible under more unreliable models, such as automation.

To begin with our image generation process review, let’s start with the simplest setup: a machine runs a model and generates images.

Given this simple setup, our options are quite limited. We can either enhance the reliability of the model or we can select and tune the already generated images. In the following sections we present some approaches that address both of these aspects.

Caveat: The future is coming fast

Before presenting these tricks, we need to address the elephant in the room. The future is coming… and it’s coming fast! There is a prevailing trend that bigger and better models are emerging left and right. Evidence indicates that larger models can overcome the problems and limitations previously found in smaller models.

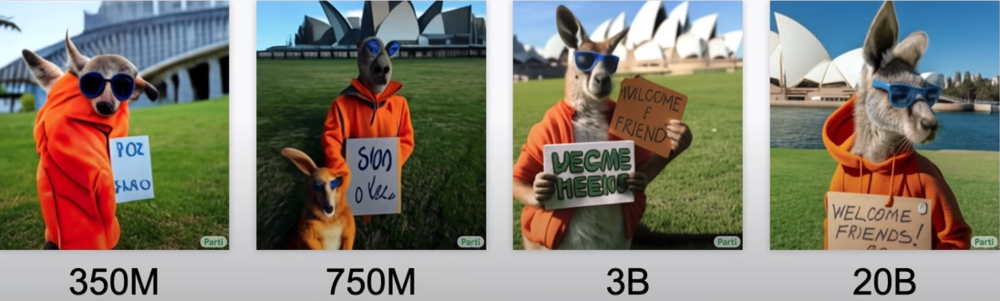

Comparison of results from models with different numbers of parameters.

Therefore, with the landscape constantly changing, keep this in mind: besides the tips shared later, the key is to think creatively, stay updated and bring value to the next significant model release.

Tip 1: Use fine tuned models instead of vanilla ones

If you want to create images for a specific purpose, it's usually better to use a model that has been fine-tuned for a similar task rather than the original model.

There are various pre-trained models available online for different purposes like portraits or realism. It's a good idea to start with one of these if your task is closely related to those areas, as it will likely provide higher-quality results.

If you have the resources, you can also fine-tune one yourself. What’s better than a model tailored to your needs? There are plenty of resources on how to do this, for example, this training document from Hugging Face or this beginner's guide from OctoML.

Tip 2: Use LoRAs for Fine-Tuning When Datasets are Small

We have established that fine-tuning is an effective alternative when it comes to generating images for a specific problem. But what if your dataset is really small? Don't fret, you can use Low-Rank Adaptation.

It's cheaper than full-fledged fine-tuning, takes less time, and can be done with very small datasets (roughly 10 to 200 samples).

It's also great for 'teaching' specific concepts to models, guiding the generation into narrower, more predictable scope. If you're not sure where to start, Hugging Face has a great guide with scripts to train your LoRA so that you can plug-and-play.
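To give a rough sense of why LoRA is cheaper: instead of updating a full weight matrix, it keeps that matrix frozen and trains two much smaller matrices whose product is added to it. The sketch below just counts trainable parameters for a single layer; the layer size and rank are illustrative numbers we picked, not values from any particular model.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for the two low-rank factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative sizes only (assumption): one 768 x 768 projection, rank 8.
d_out, d_in, rank = 768, 768, 8
full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
print(f"full fine-tuning: {full} trainable parameters")
print(f"LoRA (rank {rank}): {lora} trainable parameters ({full / lora:.0f}x fewer)")
```

Fewer trainable parameters means less memory and compute during training, which is part of why small datasets and modest hardware can be enough.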

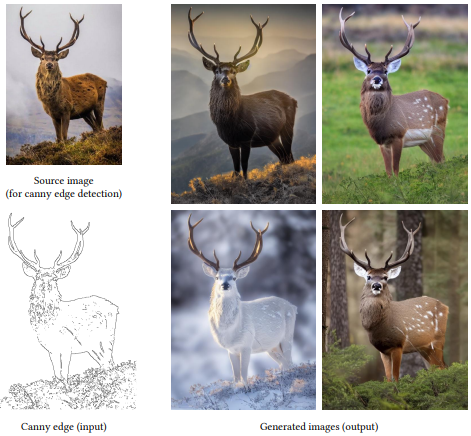

Tip 3: Use Text-To-Image Models Trained For Specific Tasks To Control Your Outputs

What do we mean by controlling your outputs? Well, let’s bring it down to a business example. Say you need to design an ad where a logo or item needs to be in a specific spot, or you need a character to be in a specific pose. Essentially, you need an image that adheres to a specific structure or composition.

There are great text-to-image models that give you control over these variables and they’re trained for different specific tasks (you can even train your own, but… you need super large datasets).

Some models to look into: ControlNet, T2I-Adapter, and GLIGEN.

Note that, while these tricks give you control over the output, they might reduce the quality of the results. In other words, images may look bad (again, depending on the underlying text-to-image model, among other factors).

Tip 4: Consider What We Focus On In An Image

While not strictly used to improve generated images, saliency maps provide a powerful tool for assessing the attention placed on different elements within an image. This proves to be beneficial in various ways—by grasping where people focus, you can assign varying importance to errors in samples, identify optimal regions for incorporating elements, or decide the most suitable cropping direction.

Tip 5: Score and Filter

Last but not least, one of the most useful methods we found was to score generated images and classify error types. In this way, we could tackle each error type separately in a divide-and-conquer fashion, increasing the percentage of acceptable images, identifying patterns, and subsequently filtering out those we found unsuitable.

The easiest and most effective way we found to implement this approach was by making up simple heuristics. We examined several failure cases and determined whether there was a possibility to eliminate the most critical issues.

Below, we provide a few examples of heuristics from different use cases.

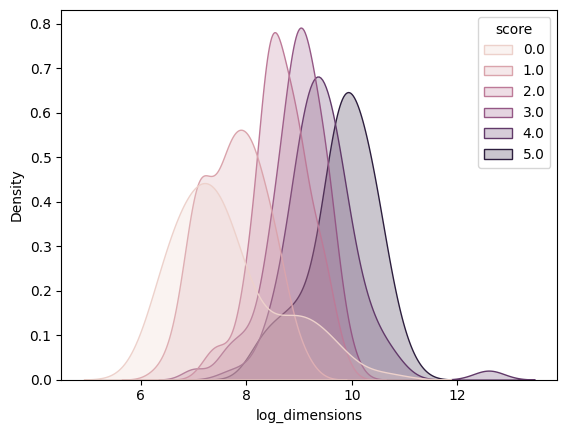

Heuristic 1: When generating images featuring people, small faces tend to look bad

We manually assessed about 600 faces and discovered a clear pattern: faces tend to have more mistakes when they occupy a small area. Thus, filtering out images containing small faces turns out to be a compelling strategy.

Density plot showing face generation quality (1 to 5) versus size (in log of pixel size), with higher scores for larger dimensions.

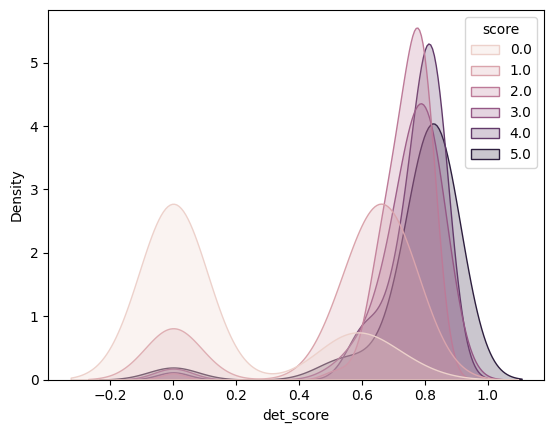

Heuristic 2: When generating images featuring people, face detector models are a good proxy

We also found that an off-the-shelf face detector’s score can serve as a straightforward metric for filtering bad images. Automatic face detectors seem to be less confident that the output is a face when the generated face has issues. So, naturally, we can leverage an out-of-the-box face detector model and keep only the images whose score is above a suitable threshold (depending on your specific use case). Below, there is a plot comparing the detector confidence distribution for different manually tagged images according to a human scorer.

So the worst offenders can easily be discarded with this technique. For images with higher scores the distributions are too close so this might not work for “almost right” samples.
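The two face heuristics above can be combined into a simple filter. The sketch below assumes you already have, for each generated image, the bounding boxes and confidence scores from whatever face detector you use; the `Face` class, the file names and both thresholds are hypothetical and would need tuning for your use case.

```python
from dataclasses import dataclass


@dataclass
class Face:
    width: int         # bounding box width in pixels
    height: int        # bounding box height in pixels
    confidence: float  # detector score in [0, 1]


# Assumed thresholds, for illustration only.
MIN_FACE_AREA = 64 * 64
MIN_CONFIDENCE = 0.9


def is_acceptable(faces: list[Face]) -> bool:
    """Reject the image if any detected face is too small or too uncertain.

    Note: an image with no detected faces passes this check.
    """
    return all(
        f.width * f.height >= MIN_FACE_AREA and f.confidence >= MIN_CONFIDENCE
        for f in faces
    )


# Made-up detector outputs for three generated images.
candidates = {
    "portrait.png": [Face(256, 256, 0.99)],
    "crowd.png": [Face(200, 200, 0.98), Face(24, 24, 0.95)],  # one tiny face
    "glitch.png": [Face(180, 180, 0.41)],                     # low confidence
}
kept = [name for name, faces in candidates.items() if is_acceptable(faces)]
print(kept)
```

As noted above, this kind of filter is good at discarding the worst offenders, but it should not be expected to separate "almost right" samples from good ones.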

Heuristic 3: When performing inpainting to generate people, simply check skin pixels

Where is the head? In a specific use case, we found ourselves needing to perform inpainting to complete a shirt picture with a human being. However, we soon discovered that some samples had a clear issue…

Sometimes generated samples had no head or arms! We implemented the simplest heuristic we could come up with: just check whether there are enough skin-colored pixels in the region. If there are more than some desired threshold, then assume it's a head or an arm. This very simple trick managed to eliminate a good chunk of bad samples.
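Here is a minimal sketch of this kind of check. The RGB rule used to decide whether a pixel is skin-colored is a common rule of thumb that we are assuming for illustration (it is not necessarily the one used in the project, and it will not cover every skin tone or lighting condition), and the 30% threshold is equally arbitrary. The image is represented as nested lists of RGB tuples to keep the example dependency-free.

```python
def looks_like_skin(r: int, g: int, b: int) -> bool:
    """Rule-of-thumb RGB skin test (an assumption for this sketch)."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_fraction(pixels, box) -> float:
    """Share of skin-colored pixels inside box = (left, top, right, bottom)."""
    left, top, right, bottom = box
    region = [pixels[y][x] for y in range(top, bottom) for x in range(left, right)]
    if not region:
        return 0.0
    return sum(looks_like_skin(*p) for p in region) / len(region)


def has_head_or_arm(pixels, box, threshold: float = 0.3) -> bool:
    """Assume a head/arm is present if enough of the region is skin-colored."""
    return skin_fraction(pixels, box) >= threshold


# Toy 4x4 image: top two rows skin-colored, bottom two rows blue shirt.
SKIN, SHIRT = (224, 172, 105), (30, 60, 200)
image = [[SKIN] * 4 for _ in range(2)] + [[SHIRT] * 4 for _ in range(2)]

print(has_head_or_arm(image, (0, 0, 4, 2)))  # region where the head should be
print(has_head_or_arm(image, (0, 2, 4, 4)))  # shirt-only region
```

In practice the region to inspect would come from the inpainting mask (for example, the area above the collar), and the threshold would be tuned on a handful of good and bad samples.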

Heuristic 4: Probing images for strange semantics with multi-modal models

A generated image can be wrong for a number of reasons. One common issue is the portrayal of impossibilities: facial abnormalities or objects seamlessly merging into one another. But images can also be wrong because they're not aligned with our human principles and comprehension of the world. To illustrate, consider the image below:

Everything going on in this picture is physically possible, but we’re immediately struck by the fact that the man is pointing the drill at his face, something you’re not supposed to do. But how would an AI know that?

Multimodal models offer a promising approach to address this issue: connecting images and text. For example, BLIP models, while heavy on hardware, might solve this issue. We gave it a go, but failed to achieve useful results, even when asking leading questions.

| Model | Prompt | Result |
| --- | --- | --- |
| blip2-opt-6.7b | Question: Why is this picture peculiar? Answer: | The man is holding a drill. |
| blip2-opt-6.7b | Question: Where is the man pointing the drill? Answer: | The man is pointing the drill at the wall. |
| blip2-opt-6.7b | Question: Is the man doing something funny? Answer: | No, he is working on a drill. |
| blip2-opt-6.7b | Question: Is the man pointing the drill to his face? Answer: | No, the man is pointing the drill to the wall. |
| instructblip-flan-t5-xl | Question: Why is this picture peculiar? Answer: | The picture is peculiar because the man is wearing a hard hat. |
| instructblip-flan-t5-xl | The picture is strange because the man is doing something wrong. Why? | The picture is strange because the man is holding the drill incorrectly. |
| instructblip-flan-t5-xl | Describe the image in full detail | The image features a man in a hard hat, holding a drill. He is wearing a safety helmet and is smiling, which suggests happiness. The drill is likely a tool used in the construction industry. |
| blip2_vicuna7b_instruct | What is unusual about this image? | The image shows a man in a hard hat holding an electric drill. This suggests he is working in a potentially hazardous environment, like a factory or construction site. The hard hat is personal protective equipment (PPE) for head injury prevention, and the drill is for construction tasks. |
| blip2_vicuna7b_instruct | What is funny about this image? | The humor lies in the man wearing a hard hat while holding an electric drill and smiling, creating a lighthearted scene. |
| blip2_vicuna7b_instruct | What is wrong with the man holding the drill? | There is no clear indication of anything wrong with the man or his situation. He appears to be using the drill as part of his job or task. |

Heuristic 5: Automatic Scoring

Even though two images might be valid, one might still be better than another. Why? Well, results will ultimately depend on the image's goal. For example, some ads work better for producing conversions than others. This phenomenon is hard to quantify because preference is hard to quantify. Asking experts would be impractical at scale; instead, an automatic metric could get the job done.

We found Pickscore (refs: 12) to be a useful automated metric for this purpose. Pickscore is an AI model that predicts the preference that a human would have for one image over another in the most general possible aspects. In this way, we can use the Pickscore to rate the generated images according to their quality and select the best ones. In our experience, it helps to identify images that deviate from the original prompt and to choose more aesthetically pleasing ones.

Disclaimer: Pickscore, trained on people liking images, may not align with your goals. It gauges aesthetics, not expert preference. It worked for us, but its utility depends on your problem. Fine-tuning on your data is an option, but it needs a sizable dataset, which can be challenging.

Conclusion

In conclusion, this article offers valuable insights into addressing the challenges posed by unreliable generative AI, specifically focusing on image generation. The key takeaway is the need for businesses to adopt strategies that enhance the reliability of AI models.

The presented tips offer practical approaches to enhance image generation processes. Firstly, using fine-tuned models tailored to specific tasks (Tip 1) and employing Low-Rank Adaptation for small datasets (Tip 2) are presented as effective methods. Furthermore, we suggest utilizing Text-To-Image models for precise control over outputs (Tip 3) and focusing on the most relevant regions within generated images through the use of saliency maps (Tip 4).

Last but not least, Tip 5 highlights a scoring and filtering mechanism to address various error types. The provided heuristics, such as analyzing face sizes and leveraging face detector models, offer practical ways to improve the overall quality of generated images using this technique. Recognizing the absence of a silver bullet in generative AI, we strongly encourage maintaining a creative and heuristic-driven approach tailored to specific use cases. Given the dynamic nature of the field and the continuous emergence of larger and better models, staying updated and thinking creatively are essential to bringing value to future model releases.

Harnessing Gen AI's Power for Robust Production Systems

Introduction

By now, you've probably noticed a considerable buzz around GenAI. It might seem like the possibilities are endless, and that's because they are. GenAI is paving the way for a wide range of applications.

We don't call ourselves #DataNerds for nothing, which is why over the past year, we've been nerding out, experimenting with different aspects of GenAI, each yielding varying levels of success.

Our main takeaway? Most GenAI capabilities can't be readily employed in client-facing or production-ready one-shot applications. Their outputs aren't quite there yet; they can be unpredictable, error-prone, yield invalid results, or fail to precisely meet desired criteria.

But don’t worry! There are a number of strategies to produce valuable results ready for production environments.

So Gen AI Isn't Perfect (just yet): What Can We Do About It?

Picture an application in which the model must produce outputs at scale—images, document summaries, and answers to customer questions via a chatbot UI. It's safe to assume that, no matter what we do, some samples will be unusable for our intended goal.

So, is this a showstopper? And what can we do about it?

Well, it depends, especially on the impact of the unnoticed faulty output. The greater the impact of the error, the greater our effort to reduce its frequency should be.

Here’s an example: If we are using a bot for social media PR then a “bot blunder” might severely affect the company’s reputation. So, in this context, one would consider incorporating additional checks carried out by humans or other means to achieve the targeted error level. The drawback? This approach would mean increased costs.

So is this a showstopper? To ensure an application is viable, both the frequency of errors and their associated costs should be within a reasonable limit.

Decoding GenAI Success: Defining Optimal Acceptance Rates

Great, so the frequency of errors and associated costs should be within a limit. How do we define that limit? What's our acceptance criteria? As with any other engineering problem, the first step is understanding what the solution should look like.

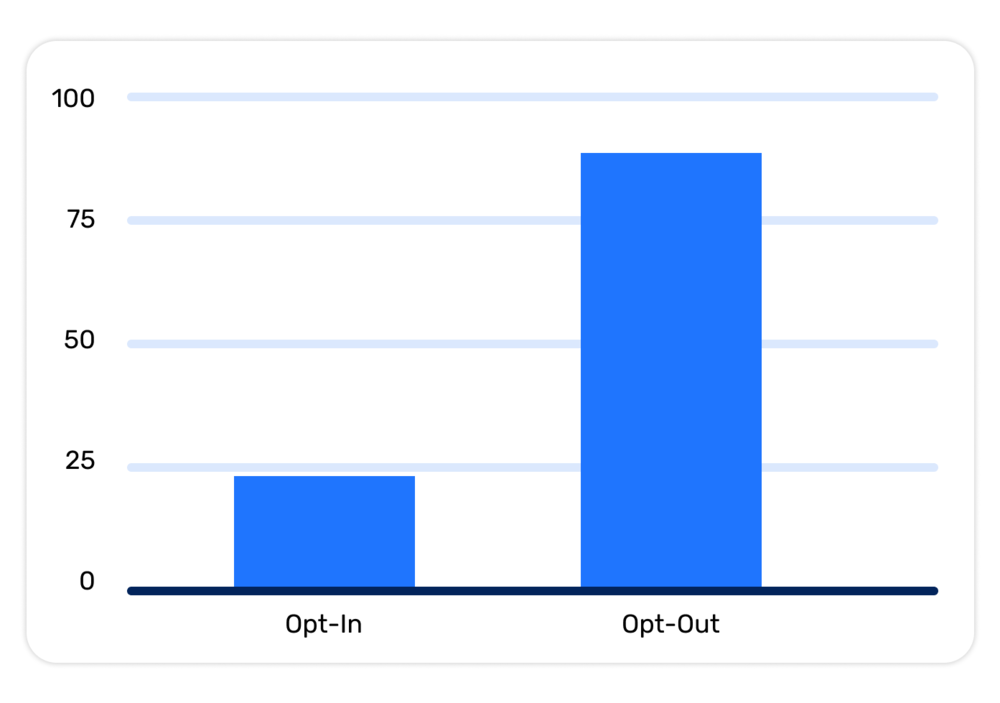

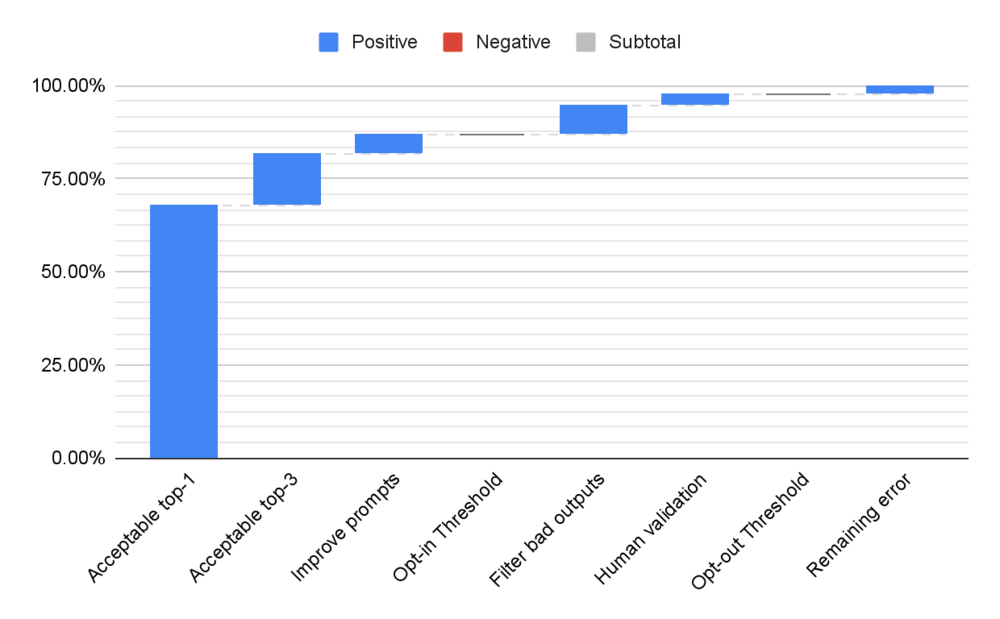

Our solution is to understand the acceptance rate of the system’s output as a continuum that enables different use cases. When it comes to implementing GenAI-based features, we identify two distinct thresholds of acceptance rates:

- Opt-in: This is the minimum acceptance rate required to open the feature to users, allowing them to either accept or reject it. If the acceptance rate falls below this threshold, the results may be considered unusable.

- Opt-out: At this level, the number of erroneous results is low enough that the feature should be enabled by default, and users who do not wish to use it should proactively disable it.

Note that what constitutes a valid result is highly dependent on the application and the UX being designed. In some cases, reworking the initial problem side by side with the client can be the key to discovering successful solutions.

The Opt-In / Opt-Out Framework In Action

Let's walk through what applying the opt-in / opt-out framework might look like in a business and feature design context. Keep in mind that the steps listed are examples and will vary from case to case.

Imagine a Gen AI system used to generate product descriptions for a website. In this scenario, we'll set the acceptance threshold at 85% for the opt-in case and 98% for the opt-out case.

Let’s go through the steps to get to the opt-in threshold:

1. As a starting point, we generate a single output text per product, and we deem it acceptable for only 68% of the input products.

2. We notice that if we adapt the UX to let the user select one of 3 candidate descriptions, the acceptance rate improves by 14 percentage points, to 82%.

3. With some prompt engineering we gain an additional 5 points, landing us at 87%, above the opt-in threshold.

Now, the opt-out threshold:

1. We can identify bad outputs, filter them out, and replace them by sampling new ones from the generative process. Let's say this yields an 8-point improvement, bringing us to 95%.

2. At this point, if we run out of automated improvements, the way to cover the remaining 3 points needed for the opt-out level might be to add a human moderation filter to the process. Note that this might not be feasible in many applications.
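The arithmetic behind this walkthrough can be tallied with a short sketch. It assumes that each improvement adds percentage points to the acceptance rate (the only reading under which the steps cross the 85% threshold); the step labels, function names, and variable names are illustrative and not part of any real tooling.

```python
# Thresholds from the product-description example, in percent.
OPT_IN_THRESHOLD = 85
OPT_OUT_THRESHOLD = 98

# (step label, gain in percentage points). Labels are illustrative.
# Assumption: gains are additive percentage points.
steps = [
    ("single output text (baseline)", 68),
    ("user picks one of 3 candidates", 14),
    ("prompt engineering", 5),
    ("filter bad outputs and resample", 8),
]


def tally(steps):
    """Return (label, running acceptance rate) after each step."""
    running = 0
    history = []
    for label, gain in steps:
        running += gain
        history.append((label, running))
    return history


def stage(rate):
    """Map an acceptance rate to the rollout mode it allows."""
    if rate >= OPT_OUT_THRESHOLD:
        return "opt-out"
    if rate >= OPT_IN_THRESHOLD:
        return "opt-in"
    return "below opt-in"


history = tally(steps)
for label, rate in history:
    print(f"{label:35s} -> {rate}% ({stage(rate)})")

# Points still missing for opt-out once the automated steps are exhausted;
# in the example this is the gap left for human moderation to close.
remaining_gap = OPT_OUT_THRESHOLD - history[-1][1]
print(f"gap to opt-out: {remaining_gap} points")
```

In a real project the gains would be measured on an evaluation set rather than assumed, and they rarely add up this cleanly.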

The Human Validation: Quantitatively Assessing Costs and Benefits

For some applications where “real” samples also go through moderation (such as images on a website), human validation is already part of the existing pipelines. Still, there is a balancing act between the cost of letting mistakes through and the cost of having actual people check every submission.

In more quantitative terms, we can think of a simplified model for the net income per unit based on the following variables:

- Cg: Cost of generating an output.

- Ch: Cost of having a person validate the mentioned output.

- Ce: Average cost of an error that reaches the user, whether or not a person reviewed the output.

- Pg: Probability that the system generates an erroneous output.

- Ph: Probability that the system generates an erroneous output and the error is still missed after a person validates it.

- R: Average revenue generated by having a correct output.

Now let’s define the net income:

$$Net Income = Total Revenue - Total Cost$$

In the case of an end-to-end system without human intervention, we have:

$$Total Revenue = R * (1 - Pg)$$

$$Total Cost = Cg + Ce * Pg$$

$$Net Income = R - Cg - Pg * (Ce + R)$$

With human intervention we have:

$$Total Revenue = R * (1 - Ph)$$

$$Total Cost = Cg + Ch + Ce * Ph$$

$$Net Income = R - Cg - Ch - Ph * (Ce + R)$$

By comparing these two scenarios, it’s possible to make a rough estimate of the benefit of adding human validation.
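As a minimal sketch of that comparison, the snippet below computes both net incomes directly from the total revenue and total cost definitions above. The function names and every number in the example are hypothetical, chosen only to show the mechanics.

```python
def net_income_automated(R, Cg, Ce, Pg):
    """Net income per unit for the end-to-end system (no human check)."""
    total_revenue = R * (1 - Pg)
    total_cost = Cg + Ce * Pg
    return total_revenue - total_cost


def net_income_with_human(R, Cg, Ch, Ce, Ph):
    """Net income per unit when a person validates every output."""
    total_revenue = R * (1 - Ph)
    total_cost = Cg + Ch + Ce * Ph
    return total_revenue - total_cost


def human_validation_benefit(R, Cg, Ch, Ce, Pg, Ph):
    """Positive when adding human validation increases net income."""
    return (net_income_with_human(R, Cg, Ch, Ce, Ph)
            - net_income_automated(R, Cg, Ce, Pg))


# Hypothetical per-unit figures, for illustration only.
params = dict(R=1.0, Cg=0.05, Ch=0.10, Ce=5.0, Pg=0.10, Ph=0.01)

benefit = human_validation_benefit(**params)

# Largest validation cost Ch at which the human check still pays off,
# obtained by subtracting the two net incomes and solving for Ch.
break_even_ch = (params["Pg"] - params["Ph"]) * (params["Ce"] + params["R"])

print(f"benefit per unit: {benefit:.2f}")
print(f"break-even Ch:    {break_even_ch:.2f}")
```

Subtracting the two net incomes shows that, under this simplified model, human validation pays off only while Ch stays below (Pg - Ph) * (Ce + R): the check has to cost less than the errors it prevents.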

Some caveats:

- Errors vary in severity; certain errors, like generating offensive or illegal content through an image generator or producing false information on sensitive topics via a text generation system, can be more costly.

- In tasks like automation, incorporating human validation may defeat the purpose. What’s the point of a shopping assistant if every output requires manual checks before reaching the user?

- The same applies to low-latency use cases, for which real-time human validation is infeasible.

Wrapping Up

We've tackled some of the challenges of integrating GenAI into production environments, shining the spotlight on the tricky terrain of error management and acceptance rates. Remember! It’s crucial to strike a balance between automation and manual checks.

If you're interested in GenAI (and if you've made it this far, we're guessing you are), stay tuned for the next entry in our new series: “Exploring Gen AI's real-world applications”. We'll be focusing on practical cases in image and text generation in upcoming posts. Additionally, we'll delve into various sampling heuristics aimed at minimizing the chances of bad samples reaching users.
